Forget Quantum? Why Photonic Data Centers Could Arrive First

January 30, 2026

Alamy

Why It Matters

Photonic data centers could satisfy exploding AI workload demands while slashing power and space costs, reshaping data‑center economics ahead of quantum adoption.

Key Takeaways

- Light‑based chips deliver faster, lower‑latency processing
- Parallel optical operations boost AI training throughput
- Energy use drops, easing cooling requirements
- Higher compute density reduces rack footprint
- Full‑scale photonic computers still years away

Pulse Analysis

The relentless growth of generative‑AI models has stretched traditional silicon‑based data centers to their limits, prompting operators to hunt for new compute paradigms. Photonic computing, which processes information with photons rather than electrons, builds on the fiber‑optic infrastructure already ubiquitous in modern facilities. By embedding linear‑algebra operations directly onto photonic integrated circuits, these chips can perform matrix multiplications—the workhorse of neural networks—without the resistive losses that throttle electronic transistors. This shift promises a fundamental leap in raw throughput while leveraging existing optical networking expertise.

Four technical virtues set photonics apart. First, optical signals are not slowed by the resistive and capacitive delays that constrain electronic interconnects, which slashes on‑chip latency. Second, the wave nature of light enables massive parallelism, allowing thousands of channels to operate simultaneously, a natural match for AI training and inference workloads. Third, optical logic consumes far less power, translating into lower cooling loads and a smaller carbon footprint. Fourth, dense packaging of waveguides and modulators can fit more compute per square millimeter, shrinking rack footprints. While quantum photonics still relies on fragile quantum states, photonic accelerators function as deterministic, classical devices, accelerating their path to deployment.

Despite promising lab results—such as ultra‑fast photonic memory demonstrated in late‑2025 and prototype neural‑network accelerators published in Nature—commercial‑grade photonic computers remain a few years out. Data‑center operators can prepare by revisiting rack geometry to exploit higher compute density and by upgrading internal networking to avoid bottlenecks once optical processors become mainstream. Early adopters stand to gain competitive advantage through reduced energy bills and the ability to scale AI services without expanding physical footprints. As the ecosystem matures, photonic data centers are poised to reshape the economics of high‑performance computing well before quantum machines achieve general‑purpose viability.

Photonic technologies are emerging as a realistic route to higher‑throughput, more energy‑efficient data centers – potentially well before general‑purpose quantum machines become mainstream. Optical technologies already form the backbone of high‑performance networking, and new photonic accelerators and components promise tangible gains in bandwidth, latency, and energy efficiency for AI workloads.

Here’s what that means, what’s real today, and how operators can prepare for photonic infrastructure.

What Is Photonic Computing?

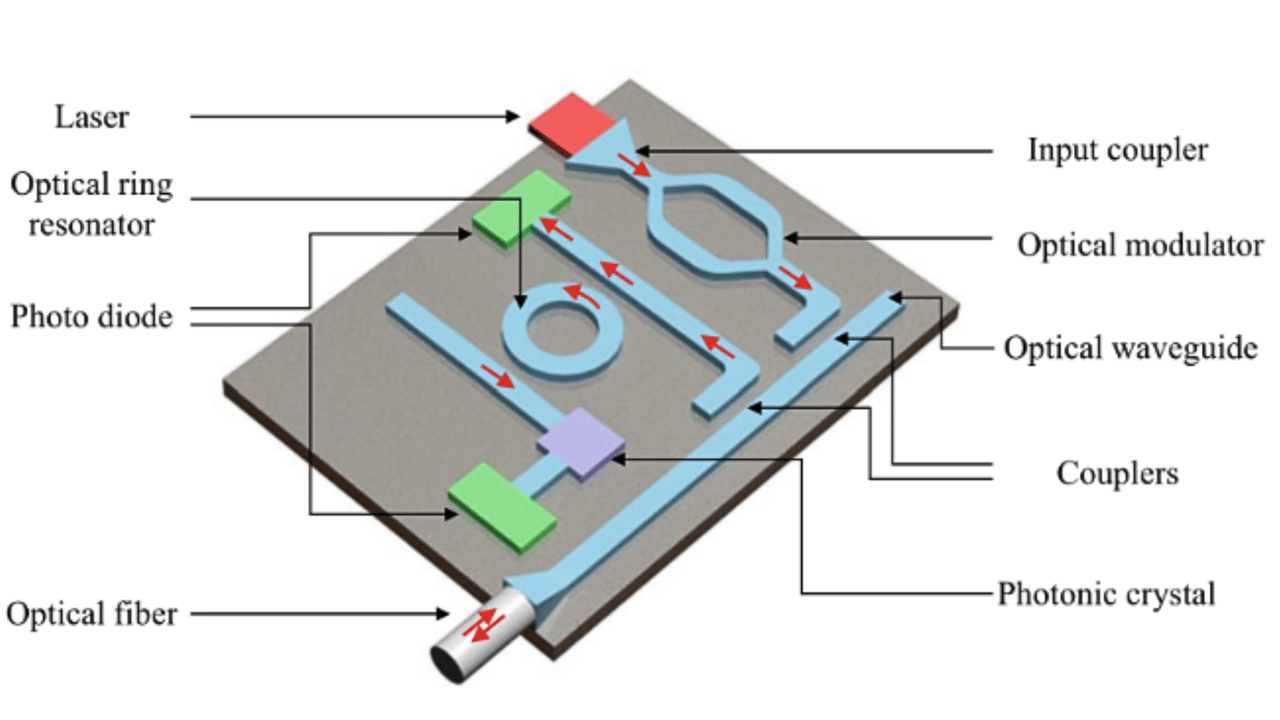

Photonics uses light to transmit and process data. Most data centers already rely on photonic technology for fiber‑optic networking. The newer development is computing with light using photonic integrated circuits (PICs), which execute operations (often linear algebra) directly in the optical domain.
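To make "linear algebra in the optical domain" more concrete, here is a minimal, idealized sketch of one widely used building block: a Mach-Zehnder interferometer (MZI), which applies a 2×2 matrix to the complex light amplitudes travelling in two waveguides. The model assumes lossless 50:50 couplers and a single tunable phase shift; the function names (`mzi`, `propagate`, `intensities`) are hypothetical, and real PICs cascade many such interferometers to implement larger matrices. It is a textbook simulation, not a description of any particular vendor's chip.

```python
import cmath
import math

Matrix = list[list[complex]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply two 2x2 complex matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


# Idealized, lossless 50:50 directional coupler acting on two waveguide modes.
_S = 1 / math.sqrt(2)
COUPLER: Matrix = [[_S, 1j * _S], [1j * _S, _S]]


def mzi(theta: float) -> Matrix:
    """Transfer matrix of an MZI: coupler -> phase shift theta on the
    upper arm -> coupler. Changing theta 'programs' the 2x2 matrix."""
    phase: Matrix = [[cmath.exp(1j * theta), 0], [0, 1]]
    return matmul(matmul(COUPLER, phase), COUPLER)


def propagate(theta: float, x: list[complex]) -> list[complex]:
    """Apply the MZI to input field amplitudes x. In hardware this
    matrix-vector product happens through interference as light propagates."""
    t = mzi(theta)
    return [t[0][0] * x[0] + t[0][1] * x[1],
            t[1][0] * x[0] + t[1][1] * x[1]]


def intensities(y: list[complex]) -> list[float]:
    """Photodetectors measure optical power |amplitude|^2, not the field itself."""
    return [abs(v) ** 2 for v in y]


if __name__ == "__main__":
    x = [1, 0]  # light enters the upper waveguide only
    for theta in (0.0, math.pi / 2, math.pi):
        p = intensities(propagate(theta, x))
        print(f"theta={theta:.3f}  output powers={[round(v, 3) for v in p]}")
```

In this idealized model, a phase of 0 routes all input light to the lower output, π keeps it in the upper output, and π/2 splits it evenly. Setting the phases loads the matrix weights; the multiplication itself costs no extra switching, because it is performed by the light passing through the circuit.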

This differs from:

- Classical computing, which manipulates electrons on CMOS chips.
- Quantum computing, which exploits quantum‑mechanical effects such as superposition and entanglement. Some quantum systems use photons, but photonic computing does not require quantum effects and can be engineered to function much like specialized classical accelerators.

Why Photonics Matter for Data Centers

When applied to computation, photonic systems offer four compelling advantages for data‑center workloads:

- Speed and Bandwidth – Optical signals propagate at the speed of light in the waveguide and aren’t subject to the resistive and capacitive effects that slow electrical signals in metal interconnects. This allows photonic computers to move data faster and reduce latency for on‑chip operations and chip‑to‑chip communication.
- Parallelism – Photonic computers lend themselves well to large‑scale parallel processing, which is especially beneficial for AI training and inference, workloads dominated by operations that can run simultaneously.
- Energy Efficiency – Photonic computers can consume less power than conventional electronic devices, easing the burden on data‑center cooling systems.
- Compute Density – Photonics can pack more computing power into smaller chips, potentially leading to smaller devices and more efficient use of physical space in data centers.

Taken together, these advantages position photonic computing to address some of the industry’s toughest challenges, such as limited power availability and the constraints on scaling capacity for fast‑growing AI workloads. If photonic technologies mature as expected, operators may meet performance targets with fewer racks and lower energy budgets, reducing the need for additional data‑center space while improving efficiency.

Are Photonic Data Centers Practical?

While it’s easy to highlight the benefits of photonic computing, the real question is whether it’s ready for prime time. For now, the answer is a clear “not yet,” though momentum is building.

In late 2025, scientists announced the development of photonics‑based computer memory devices, a major step toward both processing and storing data as light. Researchers have also made inroads in creating AI accelerators capable of executing neural network operations with light, and academic interest in photonics’ potential to transform computing continues to grow.

These developments don’t change the fact that no one has yet created a fully functional, general‑purpose photonic computer for real‑world deployment. However, they do suggest that practical photonic systems for data centers may be on the near horizon.

What Photonic Computing Means for Facility Design

If photonic computers begin entering data centers, the good news is that they can generally use the same types of power and cooling systems as conventional computers.

That said, operators may want to plan for targeted adjustments, including the following:

- Rethinking rack dimensions and layouts to capitalize on high compute density, since some photonic computers may require less physical space.
- Improving internal network connections, since slower links could become the bottleneck when computation takes place at breakneck speeds.

It’s too early for operators to invest heavily in these changes today, but this guidance may shift later in the decade as photonic technologies mature.
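The networking point in the list above is essentially Amdahl's law: speeding up only the compute portion of a job leaves the data‑movement portion untouched. The sketch below uses purely hypothetical timings (0.8 s of compute and 0.2 s of communication per training step) and assumes the two phases run back to back without overlapping; the function names are illustrative, and the numbers are not measurements of any photonic system.

```python
def step_time(compute_s: float, comm_s: float,
              compute_speedup: float = 1.0, link_speedup: float = 1.0) -> float:
    """Time for one step when compute and communication run back to back
    (no overlap) and each phase is accelerated independently."""
    return compute_s / compute_speedup + comm_s / link_speedup


def overall_speedup(compute_s: float, comm_s: float,
                    compute_speedup: float, link_speedup: float = 1.0) -> float:
    """End-to-end speedup relative to the unaccelerated baseline."""
    baseline = step_time(compute_s, comm_s)
    return baseline / step_time(compute_s, comm_s, compute_speedup, link_speedup)


if __name__ == "__main__":
    COMPUTE_S, COMM_S = 0.8, 0.2  # hypothetical per-step timings, in seconds

    # Compute becomes 10x faster; internal network unchanged.
    t = step_time(COMPUTE_S, COMM_S, compute_speedup=10)
    print(f"compute 10x only: {t:.2f} s/step, "
          f"speedup {overall_speedup(COMPUTE_S, COMM_S, 10):.2f}x, "
          f"network share {COMM_S / t:.0%}")

    # Compute 10x faster and internal links upgraded 4x.
    t = step_time(COMPUTE_S, COMM_S, compute_speedup=10, link_speedup=4)
    print(f"compute 10x, links 4x: {t:.2f} s/step, "
          f"speedup {overall_speedup(COMPUTE_S, COMM_S, 10, 4):.2f}x")
```

With these assumed figures, making compute alone 10x faster cuts a 1.0 s step to 0.28 s, an end‑to‑end gain of only about 3.6x, and communication grows to roughly 71% of each step. Upgrading the links as well brings the step to 0.13 s (about 7.7x), which is why network upgrades belong in the same plan as faster processors.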

About the Author

Christopher Tozzi – Datacenter Knowledge
