Visual Intelligence Powering Next-Generation Robotics

February 17, 2026


Why It Matters

By embedding metrology‑level perception into robots, manufacturers gain flexibility, reduce scrap and accelerate time‑to‑market for complex, low‑volume products. The shift also creates new value streams around data‑driven quality and adaptive automation.

Key Takeaways

- Vision now provides sub‑millimeter measurement for robots

- 3D imaging enables real‑time geometry verification

- AI-driven vision detects complex defects and learns over time

- Inline vision controls processes, reducing scrap rates

- Collaborative robots use vision for safety and dynamic workspaces

Pulse Analysis

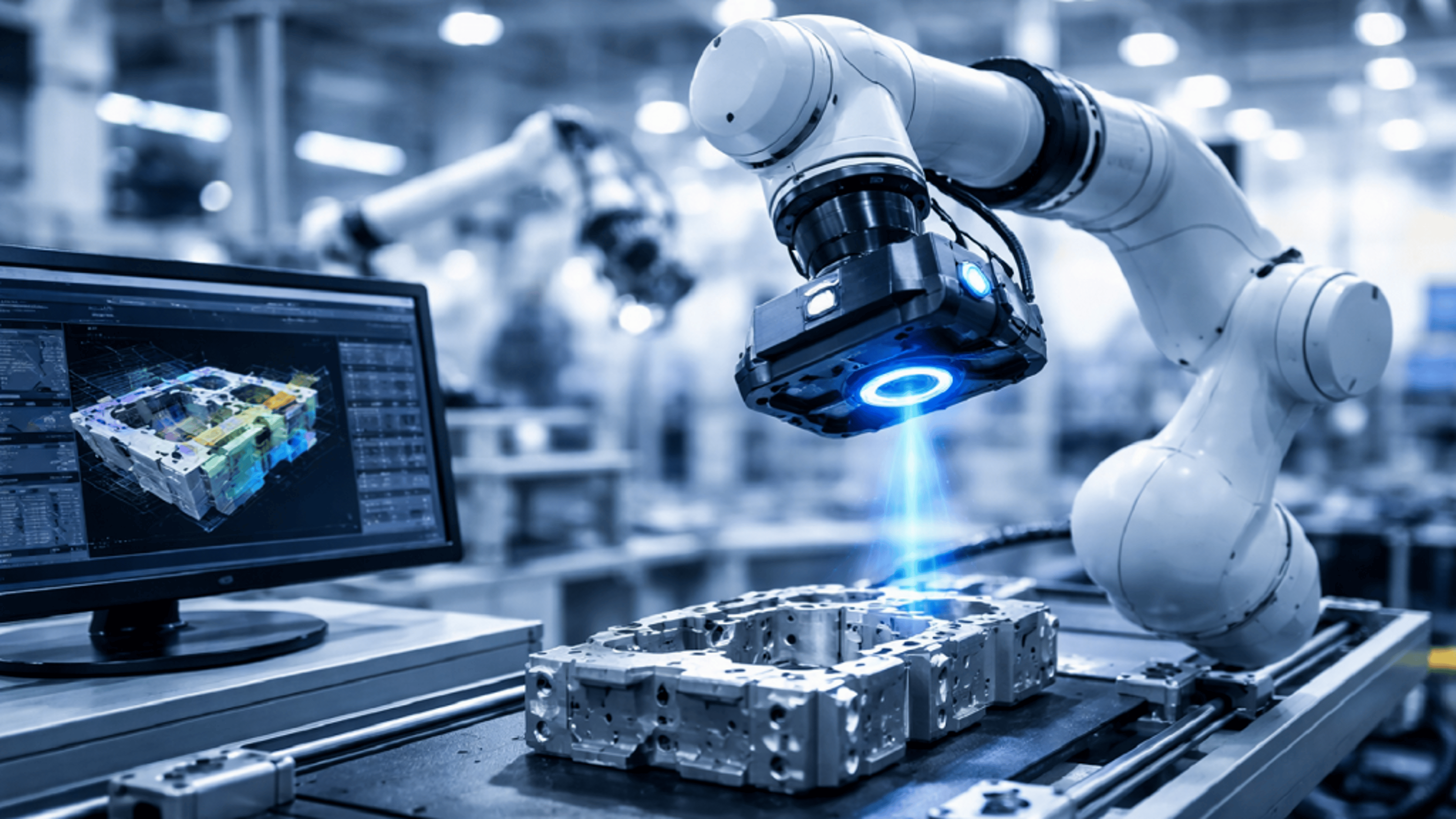

The rise of machine vision in robotics reflects a broader convergence of perception and action. Modern cameras deliver megapixel detail, while structured‑light and time‑of‑flight sensors generate dense 3D point clouds. Coupled with edge AI, these data streams support sub‑millimeter positioning and defect classification, bringing measurement capability once limited to coordinate‑measuring machines onto the robot itself. As a result, robots are no longer confined to pre‑programmed motions; they continuously measure, adjust and execute, delivering metrology‑grade accuracy on the shop floor.

Flexibility is the new competitive edge in manufacturing, especially for high‑mix, low‑volume production. Vision‑guided robots can locate randomly oriented parts, re‑orient them on conveyors, and adapt tool paths on the fly, eliminating costly fixturing. Industries such as electric‑vehicle battery assembly, aerospace composite fabrication, miniaturized electronics and precision medical devices are leveraging this adaptability to shorten changeover times and maintain tight tolerances despite part variability. Integrated with digital twins and manufacturing execution systems, vision data becomes a live thread that aligns design intent with actual production outcomes.

Despite its promise, deploying vision‑centric robotics faces practical hurdles. Variable lighting and reflective surfaces complicate image capture, high‑resolution data streams strain edge processors, and calibrating multi‑sensor arrays to traceable standards remains complex. Moreover, the workforce must blend optics, AI, data science and robotics expertise, a skill set that has traditionally been siloed. Looking ahead, tighter fusion of vision with force and tactile sensing, self‑calibrating algorithms and AI models trained on shared global datasets are likely to further dissolve the boundaries between robotics, vision and metrology, cementing visual intelligence as the primary sense for next‑generation automation.


February 17, 2026 · Gerald Jones · Editorial Assistant

Machine vision has moved far beyond its early role as a digital replacement for human inspection. Today, it is becoming a core enabler of robotic intelligence, giving automated systems the contextual awareness, adaptability, and measurement capability required for high‑mix, high‑precision manufacturing.

As industrial robotics shifts from repetitive, pre‑programmed motion toward flexible, data‑driven decision‑making, machine vision is emerging as the sensory backbone that connects automation with metrology‑grade accuracy.

From Guidance to Measurement

Historically, vision systems in robotics were used primarily for presence detection, part localization, and basic pass/fail inspection. While these functions remain essential, they represent only a fraction of what modern vision technologies now contribute.

Advances in high‑resolution industrial cameras, 3D imaging and structured‑light systems, edge AI processing, and deep‑learning‑based defect recognition have elevated machine vision from a tool that simply “sees” into a system that effectively measures and controls. Robots no longer rely solely on fixed coordinates or mechanical fixturing. Instead, vision systems now deliver sub‑millimeter positioning data, real‑time dimensional verification, surface and geometric defect detection, and adaptive path correction.
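Whether a camera can deliver sub-millimeter data depends first on how much of the part each pixel covers. The short Python sketch below works through that arithmetic; the 2448-pixel sensor width, the 100 mm field of view, and the three-pixels-per-feature rule of thumb are illustrative assumptions, not figures from this article.

```python
def mm_per_pixel(fov_mm: float, pixels: int) -> float:
    """Object-space width covered by one pixel, assuming the field of view maps evenly onto the sensor."""
    return fov_mm / pixels


def smallest_feature_mm(fov_mm: float, pixels: int, pixels_per_feature: int = 3) -> float:
    """Smallest feature spanning `pixels_per_feature` pixels (an assumed rule of thumb, not a standard)."""
    return mm_per_pixel(fov_mm, pixels) * pixels_per_feature


# Hypothetical setup: a 2448-pixel-wide sensor viewing a 100 mm wide field.
print(f"{mm_per_pixel(100.0, 2448):.4f} mm per pixel")   # 0.0408 mm per pixel
print(f"{smallest_feature_mm(100.0, 2448):.3f} mm smallest feature")
```

At roughly 0.04 mm per pixel, sub-millimeter localization is plausible on paper; in practice, lens distortion, lighting and sub-pixel edge fitting shift the achievable figure in either direction.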

In effect, vision is closing the gap between robotics and inline metrology, turning robots into mobile, measurement‑aware platforms.

Enabling Flexible Manufacturing

One of the defining pressures in modern manufacturing is the shift toward high‑mix, low‑volume production. Traditional robotic automation thrives on consistency, while variation introduces uncertainty. Machine vision is what allows robots to handle that variation.

Vision‑guided robotics enables systems to locate randomly oriented parts, correct orientation on conveyors, handle deformable or irregular components, and adjust tool paths based on part geometry. Rather than forcing parts to conform to automation constraints, vision allows automation to adapt to the parts themselves.
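One common way to locate a randomly oriented part in a 2D image is to compute the centroid and principal-axis angle of its segmented pixels (image moments), then rotate and translate a tool path that was taught in the part's own frame. The sketch below assumes a binary mask is already available from segmentation and that pixel coordinates have already been calibrated to robot coordinates; `part_pose` and `transform_path` are hypothetical helper names, and production systems often use template matching or 3D pose estimation instead.

```python
import math
from typing import List, Sequence, Tuple


def part_pose(mask: Sequence[Sequence[int]]) -> Tuple[float, float, float]:
    """Centroid (x, y) and principal-axis angle in radians of the nonzero pixels in a binary mask."""
    pts = [(x, y) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    if not pts:
        raise ValueError("no part pixels found")
    n = len(pts)
    cx = sum(x for x, _ in pts) / n
    cy = sum(y for _, y in pts) / n
    # Central second moments: how the part's pixels spread around the centroid.
    mu20 = sum((x - cx) ** 2 for x, _ in pts)
    mu02 = sum((y - cy) ** 2 for _, y in pts)
    mu11 = sum((x - cx) * (y - cy) for x, y in pts)
    theta = 0.5 * math.atan2(2 * mu11, mu20 - mu02)
    return cx, cy, theta


def transform_path(path: Sequence[Tuple[float, float]],
                   cx: float, cy: float, theta: float) -> List[Tuple[float, float]]:
    """Rotate and translate tool-path points taught in the part frame onto the observed part pose."""
    c, s = math.cos(theta), math.sin(theta)
    return [(cx + c * px - s * py, cy + s * px + c * py) for px, py in path]


# Usage: a thin diagonal part in a small hypothetical 5 x 5 mask.
mask = [[1 if x == y else 0 for x in range(5)] for y in range(5)]
cx, cy, theta = part_pose(mask)
print(cx, cy, round(math.degrees(theta), 6))   # 2.0 2.0 45.0
print(transform_path([(1.0, 0.0), (2.0, 0.0)], cx, cy, theta))
```

The principal-axis angle is only defined modulo 180 degrees (and here in image coordinates, where y points down), so a part that is not symmetric end to end needs an extra cue, such as a hole or notch, to pick the correct direction.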

This flexibility is especially critical in industries such as automotive, where EV battery assemblies and lightweight structures introduce new geometries; aerospace, where composite parts and complex forms are common; electronics, where miniaturization pushes precision limits; and medical‑device manufacturing, where tight tolerances and product variability coexist.

3D Vision and the Rise of Spatial Awareness

The integration of 3D machine vision represents one of the most transformative developments in robotic automation. Technologies such as laser triangulation, stereo vision, time‑of‑flight sensing, and structured light are giving robots a form of spatial perception that was once limited to coordinate‑measuring systems.
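For the stereo-vision case, the core geometry fits in one line: in a rectified camera pair, depth is inversely proportional to disparity, Z = f·B/d, where f is the focal length in pixels, B the baseline between the cameras and d the disparity in pixels. The Python sketch below uses hypothetical camera parameters and also shows why sub-pixel matching matters for sub-millimeter work: a fixed disparity error costs more depth accuracy the farther away the part is.

```python
def stereo_depth_mm(focal_px: float, baseline_mm: float, disparity_px: float) -> float:
    """Depth of a point seen by a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_mm / disparity_px


def depth_error_mm(focal_px: float, baseline_mm: float, depth_mm: float,
                   disparity_err_px: float) -> float:
    """First-order depth uncertainty from a disparity error: dZ ~ Z**2 / (f * B) * dd."""
    return depth_mm ** 2 / (focal_px * baseline_mm) * disparity_err_px


# Hypothetical rig: 2000 px focal length, 100 mm baseline.
z = stereo_depth_mm(2000.0, 100.0, 400.0)
print(z)                                       # 500.0
print(depth_error_mm(2000.0, 100.0, z, 1.0))   # 1.25 mm with whole-pixel matching
print(depth_error_mm(2000.0, 100.0, z, 0.1))   # about 0.125 mm with 0.1 px sub-pixel matching
```

Laser triangulation and structured light rest on the same triangle relationship, with a laser or projector taking the place of the second camera.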

With 3D vision, robots can measure part geometry before processing, compensate for tolerances in real time, verify assembly fit during operation, and detect warpage or deformation. This enables true closed‑loop control, where measurement data continuously informs motion, force, and positioning.

Instead of operating on a “move and hope” basis, robotic systems increasingly follow a measure‑adjust‑execute model, where sensing and action are tightly coupled.
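The measure-adjust-execute cycle can be reduced to a small feedback loop. The Python sketch below is a one-dimensional illustration under stated assumptions: `measure` stands in for a vision measurement of the remaining offset in millimeters, `move` for a robot correction, and the 0.05 mm tolerance and 0.8 gain are hypothetical values. Real cells correct full six-degree-of-freedom poses and must account for measurement noise and latency.

```python
from typing import Callable, Tuple


def measure_adjust_execute(
    measure: Callable[[], float],
    move: Callable[[float], None],
    tolerance_mm: float = 0.05,
    gain: float = 0.8,
    max_iters: int = 10,
) -> Tuple[int, float]:
    """Measure the remaining offset, apply a partial correction, and stop once within tolerance.

    Returns (number of corrections applied, final measured offset)."""
    for corrections in range(max_iters):
        error = measure()                 # vision measurement of remaining offset (mm)
        if abs(error) <= tolerance_mm:
            return corrections, error     # within tolerance: the process step can execute
        move(gain * error)                # gain < 1 damps overshoot from noisy measurements
    raise RuntimeError("offset did not converge within tolerance")


# Usage with a simulated part whose feature sits 1.2 mm from the nominal position.
class SimulatedCell:
    def __init__(self, target_mm: float) -> None:
        self.target_mm = target_mm
        self.position_mm = 0.0

    def measure(self) -> float:
        return self.target_mm - self.position_mm

    def move(self, delta_mm: float) -> None:
        self.position_mm += delta_mm


cell = SimulatedCell(1.2)
print(measure_adjust_execute(cell.measure, cell.move))  # converges after 2 corrections
```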

AI Is Changing What Vision Can Understand

Traditional vision systems relied heavily on rules‑based algorithms that performed well in structured environments but struggled with variability. Modern systems are increasingly powered by deep learning, enabling them to detect complex and subtle patterns that were previously difficult or impossible to define programmatically.

AI‑enhanced vision allows robots to identify cosmetic defects, variability in natural or composite materials, surface‑texture anomalies, and context‑dependent assembly deviations. These systems are not static; they can improve over time as new examples are collected and models are retrained. When integrated with manufacturing data platforms, machine vision becomes part of a continuous‑improvement loop rather than a standalone inspection step.

This evolution also introduces new metrology considerations. Explainability of AI‑driven decisions, validation of models, and traceability of inspection data are becoming as important as raw detection performance, particularly in regulated or safety‑critical industries.
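Traceability in practice often means that every AI verdict can be tied back to the exact input and the exact validated model that produced it. As a hedged sketch, the Python below stores a SHA-256 fingerprint of the image alongside a model version string and the decision; the field names, the version string and the part ID are hypothetical, not a standard schema.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class InspectionRecord:
    part_id: str
    model_version: str   # identifies the validated model that made the call
    image_sha256: str    # fingerprint of the exact image that was judged
    decision: str        # e.g. "accept" or "reject"
    confidence: float    # model score, kept so thresholds can be audited later


def make_record(part_id: str, model_version: str, image_bytes: bytes,
                decision: str, confidence: float) -> InspectionRecord:
    """Bundle an AI inspection verdict with the evidence needed to audit it."""
    digest = hashlib.sha256(image_bytes).hexdigest()
    return InspectionRecord(part_id, model_version, digest, decision, confidence)


# Usage with placeholder data.
record = make_record("P-0001", "defect-model-1.3.0", b"raw image bytes", "reject", 0.97)
print(json.dumps(asdict(record)))
```

Storing the hash rather than only a file name lets an auditor confirm later that an archived image is the one the model actually saw.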

Inline Metrology and Process Control

Machine vision is increasingly used not only to verify finished outcomes but to control processes in real time. Vision systems guide robotic welding through seam tracking, adjust adhesive bead placement based on observed geometry, correct machining paths according to measured stock variation, and monitor additive‑manufacturing layers as they are built.

This marks a clear transition from inspection after the fact to quality assurance during the process itself. In this context, vision data feeds directly into manufacturing execution systems, digital twins, and statistical‑process‑control platforms, making it an integral part of the digital thread that connects design intent to production reality.
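As a minimal example of how vision measurements can feed statistical process control, the Python sketch below builds an individuals (I-MR) control chart: the center line is the baseline mean, sigma is estimated from the average moving range divided by the d2 constant 1.128, and any new measurement beyond three sigma is flagged. The adhesive bead widths are made-up values, and real SPC platforms add run rules and rational subgrouping on top of this.

```python
from statistics import mean
from typing import List, Sequence, Tuple


def individuals_limits(baseline: Sequence[float]) -> Tuple[float, float, float]:
    """Return (LCL, center, UCL) for an individuals chart using the moving-range sigma estimate."""
    if len(baseline) < 2:
        raise ValueError("need at least two baseline measurements")
    moving_ranges = [abs(b - a) for a, b in zip(baseline, baseline[1:])]
    sigma = mean(moving_ranges) / 1.128   # d2 constant for moving ranges of two points
    center = mean(baseline)
    return center - 3 * sigma, center, center + 3 * sigma


def out_of_control(values: Sequence[float], lcl: float, ucl: float) -> List[int]:
    """Indices of measurements that fall outside the control limits."""
    return [i for i, v in enumerate(values) if v < lcl or v > ucl]


# Hypothetical bead widths (mm) measured by a vision system during a stable run.
baseline = [4.00, 4.02, 3.98, 4.01, 3.99, 4.00]
lcl, center, ucl = individuals_limits(baseline)
print(out_of_control([4.01, 4.09, 3.99], lcl, ucl))   # [1]
```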

Human–Robot Collaboration and Safety

Machine vision also plays a central role in collaborative robotics. Vision systems support workspace monitoring, human‑presence detection, and dynamic speed‑and‑separation control, enabling robots to operate safely alongside people.
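Speed-and-separation monitoring is commonly framed around a protective separation distance, as described in ISO/TS 15066: the robot must slow or stop whenever the measured human-robot distance falls below it. The Python sketch below uses a simplified constant-speed form (human approach during the robot's reaction and stopping time, robot travel during the reaction time, the robot's stopping distance, plus intrusion and sensing-uncertainty allowances). All parameter values are hypothetical, and the code illustrates the arithmetic rather than a validated safety function.

```python
def protective_separation_mm(
    v_human: float,                  # human approach speed toward the robot (mm/s)
    v_robot: float,                  # robot speed toward the human (mm/s)
    t_reaction: float,               # sensing + control reaction time (s)
    t_stop: float,                   # robot stopping time (s)
    s_stop: float,                   # robot stopping distance (mm)
    intrusion: float = 0.0,          # allowance for body parts reaching past the detection zone (mm)
    human_uncertainty: float = 0.0,  # position uncertainty of the vision sensing (mm)
    robot_uncertainty: float = 0.0,  # robot position uncertainty (mm)
) -> float:
    """Simplified constant-speed protective separation distance (mm)."""
    s_human = v_human * (t_reaction + t_stop)   # distance the human covers before the robot has stopped
    s_robot = v_robot * t_reaction              # distance the robot covers before it starts braking
    return s_human + s_robot + s_stop + intrusion + human_uncertainty + robot_uncertainty


def must_slow(measured_mm: float, required_mm: float) -> bool:
    """True when the vision-measured separation is below the protective distance."""
    return measured_mm < required_mm


# Hypothetical cell parameters.
required = protective_separation_mm(1600.0, 500.0, 0.1, 0.3, 100.0,
                                    human_uncertainty=50.0, robot_uncertainty=10.0)
print(round(required, 1))          # 850.0
print(must_slow(800.0, required))  # True
```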

Beyond safety, vision helps robots understand shared workspaces and handoff conditions, supporting hybrid workflows where humans perform judgment‑based or dexterous tasks while robots deliver precision, strength, and repeatability.

Challenges Ahead

Despite its expanding role, machine vision in robotics faces significant technical and operational hurdles. Industrial environments present lighting variability, reflective or transparent surfaces, and other optical challenges. Processing large volumes of high‑resolution data at line speed on edge hardware remains demanding, particularly when 3D data and AI inference are involved.
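To put the edge-processing load in numbers, the Python sketch below estimates the uncompressed data rate of a multi-camera cell. The sensor size (2448 x 2048 pixels, 8-bit monochrome), the 30 frames per second and the four cameras are assumptions chosen for illustration.

```python
def raw_rate_mb_per_s(width_px: int, height_px: int, bytes_per_px: int,
                      fps: float, cameras: int = 1) -> float:
    """Uncompressed image data rate in megabytes per second (1 MB = 1e6 bytes)."""
    return width_px * height_px * bytes_per_px * fps * cameras / 1e6


one = raw_rate_mb_per_s(2448, 2048, 1, 30)
four = raw_rate_mb_per_s(2448, 2048, 1, 30, cameras=4)
print(f"{one:.1f} MB/s per camera, {four:.1f} MB/s for the cell")
# 150.4 MB/s per camera, 601.6 MB/s for the cell
```

Hundreds of megabytes per second must be handled before any 3D point cloud or AI inference is added, which is why region-of-interest cropping and on-camera preprocessing are often used to reduce the load.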

There are also metrology‑specific concerns, such as calibrating multi‑sensor systems to traceable standards and integrating vision data into legacy automation architectures. At the same time, workforce skills must evolve. Engineers increasingly need expertise that spans optics, AI training, data science, robotics, and precision measurement, disciplines that have traditionally operated in silos.
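One small, concrete piece of calibrating to traceable standards is comparing what a vision system reports against certified reference artifacts, such as gauge blocks, and fitting a correction. The Python sketch below fits a least-squares gain and offset so that reference ≈ gain × measured + offset, then reports the residuals left after correction. The readings are hypothetical; traceability itself comes from the artifacts' calibration certificates, and a full multi-sensor calibration also covers geometry (camera intrinsics, hand-eye and sensor-to-sensor transforms), not just a linear scale.

```python
from typing import List, Sequence, Tuple


def fit_linear_correction(measured: Sequence[float],
                          reference: Sequence[float]) -> Tuple[float, float]:
    """Least-squares gain and offset such that reference ~= gain * measured + offset."""
    n = len(measured)
    if n != len(reference) or n < 2:
        raise ValueError("need at least two paired measurements")
    mx = sum(measured) / n
    my = sum(reference) / n
    sxx = sum((x - mx) ** 2 for x in measured)
    if sxx == 0:
        raise ValueError("measurements must not all be identical")
    sxy = sum((x - mx) * (y - my) for x, y in zip(measured, reference))
    gain = sxy / sxx
    return gain, my - gain * mx


def residuals(measured: Sequence[float], reference: Sequence[float],
              gain: float, offset: float) -> List[float]:
    """Error remaining at each reference artifact after applying the correction."""
    return [gain * x + offset - y for x, y in zip(measured, reference)]


# Hypothetical vision readings of certified gauge blocks (mm).
reference = [10.000, 25.000, 50.000, 100.000]
measured = [10.012, 25.021, 50.049, 100.090]
gain, offset = fit_linear_correction(measured, reference)
print(f"gain={gain:.6f}, offset={offset:.4f} mm")
print(max(abs(r) for r in residuals(measured, reference, gain, offset)))
```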

Machine Vision as a Core Robotic Sense

The trajectory is clear: machine vision is evolving from a peripheral sensor into a primary robotic sense. Future developments are likely to include tighter fusion of vision with force and tactile sensing, self‑calibrating robotic systems, AI models trained across global manufacturing datasets, and vision‑driven adaptive fixturing or even fixtureless assembly.

As this convergence continues, the distinction between robotics, machine vision, and metrology will increasingly blur. Precision will be enforced not only through hardware tolerances but through continuous measurement intelligence embedded within automated systems. Machine vision is no longer just helping robots see—it is enabling them to measure, decide, and adapt in real time.

In precision manufacturing, that capability is rapidly becoming indispensable.
