Transformers V5: Simple Model Definitions Powering the AI Ecosystem

December 1, 2025

Why It Matters

The upgrade accelerates AI development by lowering integration friction and scaling training/inference, positioning Transformers as the de facto standard for LLM ecosystems.

Key Takeaways

- 3M daily installs, up from 20k per day in v4
- Model architectures grew from 40 to over 400
- 750k+ checkpoints on the Hub, up from ~1k
- Modular design dramatically reduces the lines of code needed to contribute a model
- New APIs add continuous batching and OpenAI-compatible serving

Pulse Analysis

Transformers has become the backbone of modern AI workflows, and its v5 release reflects the platform’s meteoric adoption. With more than 3 million installations per day, the library now powers a large share of LLM-based applications, from research prototypes to production services. This scale is underpinned by a ten-fold increase in supported model families, expanding from a modest 40 architectures in v4 to over 400 today, and by a thriving community that has contributed over three-quarters of a million model checkpoints on the Hugging Face Hub.

Version 5’s engineering focus is on simplicity and modularity. By consolidating attention mechanisms into a shared interface and streamlining tokenization to a single backend, the codebase has shed unnecessary complexity, making it easier for contributors to add new models with fewer lines of code. The decision to retire Flax and TensorFlow support in favor of a PyTorch‑centric strategy further concentrates development effort, while still collaborating with JAX‑based tools to ensure cross‑framework compatibility. These changes lower the barrier for both academic and enterprise teams to adopt and extend the library.

On the training and inference fronts, v5 introduces robust pre‑training support compatible with large‑scale systems like torchtitan, megatron, and nanotron, alongside refined fine‑tuning pipelines that integrate seamlessly with popular libraries such as Unsloth and Axolotl. New inference APIs—continuous batching, paged attention, and the "transformers serve" server—enable high‑throughput, OpenAI‑compatible deployments without sacrificing flexibility. Together, these enhancements position Transformers as the definitive model‑definition hub, driving faster innovation cycles and broader AI accessibility across industries.

Transformers v5: Simple model definitions powering the AI ecosystem

Published December 1, 2025

Authors: Lysandre (lysandre), Arthur Zucker (ArthurZ), Cyril Vallez (cyrilvallez), Vaibhav Srivastav (reach‑vb)

Transformers v4.0.0rc-1, the first release candidate for version 4, was released on November 19, 2020. Five years later, we are releasing v5.0.0rc-0.

Today, as we launch v5, Transformers is installed more than 3 million times each day via pip, up from 20,000 per day in v4 🤯. Altogether, it has now surpassed 1.2 billion installs!

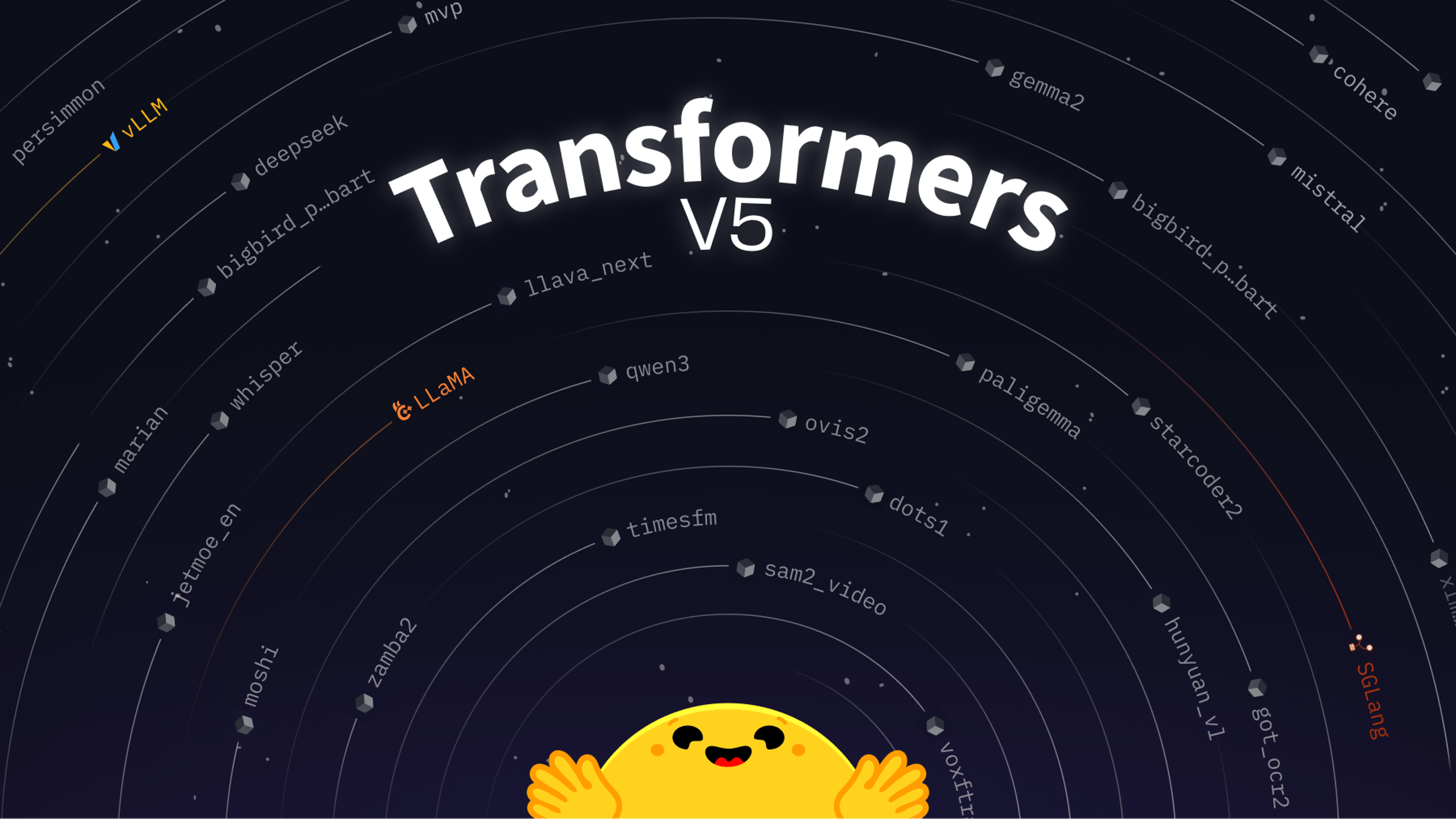

The ecosystem has expanded from 40 model architectures in v4 to over 400 today, and the community has contributed more than 750,000 model checkpoints on the Hub compatible with Transformers, up from roughly 1,000 at the time of v4.

This growth is powered by the evolution of the field and the now-mainstream access to AI. As a leading model-definition library in the ecosystem, we need to keep evolving and adapting the library to stay relevant. Reinvention is key for longevity in AI.

We’re fortunate to collaborate with many libraries and apps built on Transformers, in no specific order: llama.cpp, MLX, onnxruntime, Jan, LMStudio, vLLM, SGLang, Unsloth, LlamaFactory, dLLM, MaxText, TensorRT, Argmax, among many other friends.

For v5, we wanted to work on several notable aspects: simplicity, training, inference, and production. We detail the work that went into them in this post.

Simplicity

The first focus of the team was on simplicity. Working on Transformers, we see the code as the product. We want our model integrations to be clean, so that the ecosystem can depend on our model definitions and understand what’s really happening under the hood, how models differ from each other, and the key features of each new model. Simplicity leads to broader standardization, greater generality, and wider support.

Model Additions

“Transformers is the backbone of hundreds of thousands of projects, Unsloth included. We build on Transformers to help people fine‑tune and train models efficiently, whether that’s BERT, text‑to‑speech (TTS), or others; to run fast inference for reinforcement learning (RL) even when models aren’t yet supported in other libraries. We're excited for Transformers v5 and are super happy to be working with the Hugging Face team!”

— Michael Han, Unsloth

Transformers, at its core, remains a model-architecture toolkit. We aim to have all recent architectures and to be the “source of truth” for model definitions. We’ve been adding one to three new models every week for five years, as shown in the timeline below:

We’ve worked on improving that model‑addition process.

Modular Approach

Over the past year, we’ve heavily pushed our modular design as a significant step forward. This allows for easier maintenance, faster integration, and better collaboration across the community.

We give a deeper overview in our Maintain the Unmaintainable blog post. In short, we aim for a much easier model-contribution process and a lower maintenance burden. One metric we can highlight is that the number of lines of code to contribute (and review) drops significantly when the modular design is used.
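The core idea of a modular definition is that a new model only states how it differs from an existing one. The sketch below illustrates that idea in plain Python, assuming a toy `BaseConfig`/`BaseMLP` pair standing in for an existing architecture; every class and function here is hypothetical and is not Transformers code. As we understand it, the real library expands a modular file into a self-contained modeling file (preserving "one model, one file"), which this sketch does not attempt to reproduce.

```python
import math
from dataclasses import dataclass
from typing import List


def gelu(x: float) -> float:
    # tanh approximation of GELU
    return 0.5 * x * (1.0 + math.tanh(math.sqrt(2.0 / math.pi) * (x + 0.044715 * x ** 3)))


def silu(x: float) -> float:
    return x / (1.0 + math.exp(-x))


@dataclass
class BaseConfig:
    """Config of a hypothetical existing architecture."""
    hidden_size: int = 4
    activation: str = "silu"
    use_bias: bool = False


class BaseMLP:
    """Feed-forward block of the hypothetical existing architecture."""
    ACTIVATIONS = {"silu": silu, "gelu": gelu}

    def __init__(self, config: BaseConfig):
        self.config = config
        self.act = self.ACTIVATIONS[config.activation]
        self.bias = 0.1 if config.use_bias else 0.0

    def forward(self, xs: List[float]) -> List[float]:
        return [self.act(x) + self.bias for x in xs]


# "Modular" definition of a new model: only the differences are written down.
@dataclass
class NewModelConfig(BaseConfig):
    activation: str = "gelu"  # differs from the base model
    use_bias: bool = True     # differs from the base model


class NewModelMLP(BaseMLP):
    """New model's MLP: the forward pass is inherited unchanged from BaseMLP."""


base_out = BaseMLP(BaseConfig()).forward([0.0, 1.0])
new_out = NewModelMLP(NewModelConfig()).forward([0.0, 1.0])
```

The new model's definition is four meaningful lines; everything else is reused, which is the kind of reduction in contributed and reviewed code the paragraph above describes.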

While we respect the “One model, one file” philosophy, we continue to introduce some abstractions that simplify the management of common helpers. The prime example is the AttentionInterface, which offers a centralized abstraction for attention methods. The eager implementation remains in the modeling file; others, such as FlashAttention 1/2/3, FlexAttention, or SDPA, are moved to the interface.
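To make the idea of a centralized attention interface concrete, here is a minimal pure-Python sketch of the pattern: the eager implementation stays next to the model code, alternative implementations are registered by name in a shared registry, and the layer looks one up from a configuration string. All names below (`ATTENTION_REGISTRY`, `register_attention`, `ToyAttentionLayer`, the `"chunked"` implementation) are hypothetical; the real library exposes its own `AttentionInterface` class, whose API should be checked in the Transformers documentation.

```python
import math
from typing import Callable, Dict, List

Matrix = List[List[float]]

# Hypothetical shared registry: implementation name -> attention function.
ATTENTION_REGISTRY: Dict[str, Callable[..., Matrix]] = {}


def register_attention(name: str):
    """Decorator that adds an attention implementation to the registry."""
    def wrap(fn):
        ATTENTION_REGISTRY[name] = fn
        return fn
    return wrap


def _softmax(row: List[float]) -> List[float]:
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def eager_attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Reference scaled dot-product attention, kept alongside the model code."""
    scale = 1.0 / math.sqrt(len(q[0]))
    out = []
    for qi in q:
        scores = [scale * sum(a * b for a, b in zip(qi, kj)) for kj in k]
        weights = _softmax(scores)
        out.append([sum(w * vj[d] for w, vj in zip(weights, v)) for d in range(len(v[0]))])
    return out


@register_attention("chunked")
def chunked_attention(q: Matrix, k: Matrix, v: Matrix, chunk: int = 2) -> Matrix:
    """Stand-in for an optimized kernel: same math, queries processed in chunks."""
    out: Matrix = []
    for start in range(0, len(q), chunk):
        out.extend(eager_attention(q[start:start + chunk], k, v))
    return out


class ToyAttentionLayer:
    def __init__(self, attn_implementation: str = "eager"):
        self.attn_implementation = attn_implementation

    def forward(self, q: Matrix, k: Matrix, v: Matrix) -> Matrix:
        # The eager path lives in the "modeling file"; anything else is looked up.
        if self.attn_implementation == "eager":
            return eager_attention(q, k, v)
        return ATTENTION_REGISTRY[self.attn_implementation](q, k, v)


q = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
k = [[1.0, 0.0], [0.0, 1.0]]
v = [[1.0, 2.0], [3.0, 4.0]]
eager_out = ToyAttentionLayer("eager").forward(q, k, v)
chunked_out = ToyAttentionLayer("chunked").forward(q, k, v)
```

Swapping implementations only changes a string on the layer; the modeling code itself never has to import or branch on each backend.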

“Over the past couple of years, the increasing amount of 0‑day support for new model architectures and standardization of attention handling has helped to simplify our support for post‑training modern LLMs.”

— Wing Lian, Axolotl

Tooling for Model Conversion

We’re building tooling to help us identify which existing model architecture a new model resembles. This feature uses machine learning to find code similarities between independent modeling files. Going further, we aim to automate the conversion process by opening a draft PR for the model to be integrated into our Transformers format. This reduces manual effort and ensures consistency.
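The tooling described above uses machine learning; as a much simpler stand-in that only shows the shape of the task, one can rank existing modeling files by textual similarity to a new one. The sketch below swaps in Python's `difflib.SequenceMatcher` over whitespace-split tokens instead of any learned model, and the toy "source files" and the `rank_similar_architectures` name are hypothetical.

```python
import difflib
from typing import Dict, List, Tuple


def rank_similar_architectures(new_source: str, existing: Dict[str, str]) -> List[Tuple[str, float]]:
    """Return (name, similarity) pairs, most similar first (string matching, not ML)."""
    new_tokens = new_source.split()
    scores = []
    for name, source in existing.items():
        matcher = difflib.SequenceMatcher(None, new_tokens, source.split())
        scores.append((name, matcher.ratio()))
    return sorted(scores, key=lambda item: item[1], reverse=True)


# Hypothetical one-line "modeling files" for illustration.
existing_models = {
    "llama": "class LlamaMLP: def forward(self, x): return down(act(gate(x)) * up(x))",
    "gpt2": "class GPT2MLP: def forward(self, x): return proj(dropout(act(fc(x))))",
}
candidate = "class NewMLP: def forward(self, x): return down(act(gate(x)) * up(x))"
ranking = rank_similar_architectures(candidate, existing_models)
```

Here the gated MLP of the candidate lines up with the `llama` entry, which would be the natural base class for a modular definition.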

Code Reduction

Streamlining Modeling & Tokenization/Processing Files

We’ve significantly refactored the modeling and tokenization files. Modeling files have been greatly improved thanks to the modular approach mentioned above, on top of standardization across models. Standardization abstracts away most of the utilities that don’t belong to a model, so the modeling code contains only the parts relevant to a model’s forward/backward passes.

Alongside this work, we’re simplifying the tokenization and processing files: going forward, we’ll only focus on the tokenizers backend, removing the concept of “Fast” and “Slow” tokenizers.

We’ll use tokenizers as our main tokenization backend, just as we do for PyTorch-based models. Tokenizers backed by SentencePiece or MistralCommon will remain supported, though not as the default. Image processors will now only exist in their fast variant, which depends on the torchvision backend.

Finally, we’re sunsetting our Flax/TensorFlow support in favor of focusing on PyTorch as the sole backend; however, we’re also working with partners in the JAX ecosystem to ensure compatibility between our models and that ecosystem.

“With its v5 release, Transformers is going all‑in on PyTorch. Transformers acts as a source of truth and foundation for modeling across the field; we've been working with the team to ensure good performance across the stack. We're excited to continue pushing for this in the future across training, inference, and deployment.”

— Matt White, Executive Director, PyTorch Foundation; GM of AI, Linux Foundation

Training

Training remains a big focus of the team as we head into v5: whereas previously we focused heavily on fine‑tuning rather than pre‑training/full‑training at scale, we’ve recently done significant work to improve our support for the latter as well.

Pre‑training at Scale

Supporting pre‑training required reworking model initialization, ensuring scalability with different parallelism paradigms, and shipping optimized kernels for both forward and backward passes.
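One of the parallelism paradigms mentioned above is tensor parallelism, where a layer's weights are split across devices. As a hedged illustration (plain Python lists stand in for tensors and devices; this is not how Transformers implements it), a column-parallel linear layer gives each "device" a slice of the output columns and concatenates the partial results, which reproduces the unsplit matrix multiplication exactly.

```python
from typing import List

Matrix = List[List[float]]


def matmul(x: Matrix, w: Matrix) -> Matrix:
    """x: (batch, in), w: (in, out) -> (batch, out)."""
    return [[sum(x[b][i] * w[i][o] for i in range(len(w))) for o in range(len(w[0]))]
            for b in range(len(x))]


def split_columns(w: Matrix, num_shards: int) -> List[Matrix]:
    """Split the output dimension of w into num_shards contiguous slices."""
    out_dim = len(w[0])
    assert out_dim % num_shards == 0, "output dim must divide evenly across shards"
    step = out_dim // num_shards
    return [[row[s * step:(s + 1) * step] for row in w] for s in range(num_shards)]


def column_parallel_linear(x: Matrix, w: Matrix, num_shards: int) -> Matrix:
    # Each shard would live on its own device; here the shards are list slices.
    partials = [matmul(x, shard) for shard in split_columns(w, num_shards)]
    # "All-gather": concatenate each row's partial outputs along the feature axis.
    return [sum((p[b] for p in partials), []) for b in range(len(x))]


x = [[1.0, 2.0], [3.0, 4.0]]
w = [[1.0, 0.0, 2.0, -1.0], [0.5, 1.0, 0.0, 3.0]]
assert column_parallel_linear(x, w, num_shards=2) == matmul(x, w)
```

Because each output column depends only on its own column of weights, no communication is needed until the final gather; other layouts (row-parallel, pipeline, data parallel) trade communication differently.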

Going forward, we’re excited to have extended compatibility with torchtitan, megatron, nanotron, and any other pre‑training tool that wishes to collaborate with us.

Fine‑tuning & Post‑training

We continue collaborating closely with all fine‑tuning tools in the Python ecosystem. We aim to keep providing model implementations compatible with Unsloth, Axolotl, LlamaFactory, TRL, and others in the PyTorch ecosystem; we are also working with tools such as MaxText in the JAX ecosystem to ensure good interoperability between their frameworks and transformers.

All fine‑tuning and post‑training tools can now rely on Transformers for model definitions, further enabling agentic use‑cases through OpenEnv or the Prime Environment Hub.

Inference

We’re putting a significant focus on inference for v5, with several paradigm changes: the introduction of specialized kernels, cleaner defaults, new APIs, and support for optimized inference engines.

Similarly to training, we’ve been packaging kernels so that they’re automatically used when your hardware and software permit it. If you haven’t heard of kernels before, see the Kernels documentation.
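Using kernels "when your hardware and software permit it" boils down to capability-based dispatch with a fallback. A minimal sketch, assuming a hypothetical list of candidate implementations, each declaring the capabilities it needs; it does not use the real kernels library or its API, and the "fused" entry simply reuses the reference math.

```python
from dataclasses import dataclass
from typing import Callable, List, Set


@dataclass
class KernelCandidate:
    name: str
    requires: Set[str]  # capabilities this implementation needs
    fn: Callable[[List[float]], List[float]]


def reference_rmsnorm(xs: List[float], eps: float = 1e-6) -> List[float]:
    """Portable RMSNorm used as the always-available fallback."""
    mean_sq = sum(x * x for x in xs) / len(xs)
    inv = (mean_sq + eps) ** -0.5
    return [x * inv for x in xs]


def pick_kernel(candidates: List[KernelCandidate], available: Set[str]) -> KernelCandidate:
    """Return the first (most preferred) candidate whose requirements are all met."""
    for cand in candidates:
        if cand.requires <= available:
            return cand
    raise RuntimeError("no usable implementation")


CANDIDATES = [
    # Hypothetical optimized kernel; in this sketch it just reuses the reference math.
    KernelCandidate("fused_rmsnorm_cuda", {"cuda", "kernels_pkg"}, reference_rmsnorm),
    # Fallback with no requirements, so dispatch always succeeds.
    KernelCandidate("reference_rmsnorm", set(), reference_rmsnorm),
]

cpu_choice = pick_kernel(CANDIDATES, {"cpu"}).name
gpu_choice = pick_kernel(CANDIDATES, {"cuda", "kernels_pkg"}).name
```

Ordering candidates from most to least specialized keeps the user-facing call identical on every machine; only the selected implementation changes.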

Alongside this effort, we ship two new APIs dedicated to inference:

- Continuous batching and paged attention: already used internally for some time; we are finalizing the rough edges and writing usage guides.
- transformers serve: a new Transformers-specific serving system that deploys an OpenAI-API-compatible server.
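Continuous batching means the server does not wait for a whole batch to finish: after every decoding step, finished sequences leave and queued requests take their slots. The toy scheduler below shows only that scheduling idea (no model, no KV cache, one "token" per active request per step); the `Request` type and `run_continuous_batching` function are hypothetical and unrelated to the Transformers implementation.

```python
from collections import deque
from dataclasses import dataclass
from typing import Deque, Dict, List


@dataclass
class Request:
    rid: str
    tokens_to_generate: int
    generated: int = 0


def run_continuous_batching(requests: List[Request], max_batch: int) -> Dict[str, int]:
    """Simulate decoding; return the step at which each request finished."""
    queue: Deque[Request] = deque(requests)
    active: List[Request] = []
    finished_at: Dict[str, int] = {}
    step = 0
    while queue or active:
        # Refill free slots from the queue before every step.
        while queue and len(active) < max_batch:
            active.append(queue.popleft())
        step += 1
        # One decoding step: every active request produces one token.
        for req in active:
            req.generated += 1
        # Finished requests leave immediately, freeing their slots.
        still_running = []
        for req in active:
            if req.generated >= req.tokens_to_generate:
                finished_at[req.rid] = step
            else:
                still_running.append(req)
        active = still_running
    return finished_at


finish_steps = run_continuous_batching(
    [Request("a", 1), Request("b", 4), Request("c", 2)], max_batch=2
)
```

Here `c` takes the slot `a` frees after step 1 and finishes at step 3; with static batching it would wait for both `a` and `b` to finish and only complete at step 6.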

We see this as a major step forward for use cases such as evaluation, where a great number of inference requests are made simultaneously. We don’t aim to replace dedicated inference engines (vLLM, SGLang, TensorRT-LLM); instead, we aim to be perfectly inter-compatible with them, as detailed in the next section.
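Because transformers serve exposes an OpenAI-compatible API, any client that speaks the Chat Completions format should be able to talk to it, for example an evaluation harness firing many requests at once. The standard-library sketch below only builds the HTTP request; the base URL `http://localhost:8000` and the model id are assumptions for illustration, so check the serving documentation for the actual defaults before sending it.

```python
import json
import urllib.request
from typing import Dict, List


def build_chat_request(base_url: str, model: str,
                       messages: List[Dict[str, str]]) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style /v1/chat/completions POST request."""
    body = json.dumps({"model": model, "messages": messages}).encode("utf-8")
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/v1/chat/completions",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


req = build_chat_request(
    "http://localhost:8000",  # assumed local address of the server
    "org/some-model",         # hypothetical model id
    [{"role": "user", "content": "Hello!"}],
)
# To send it against a running server:
# with urllib.request.urlopen(req) as resp:
#     print(json.load(resp)["choices"][0]["message"]["content"])
```

Since the payload is the standard Chat Completions shape, the same client code can be pointed at other OpenAI-compatible servers by changing only the base URL.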

“The Transformers backend in vLLM has been very enabling to get more architectures, like BERT and other encoders, available to more users. We've been working with the Transformers team to ensure many models are available across modalities with the best performance possible. This is just the start of our collaboration: we're happy to see the Transformers team will have this as a focus going into version 5.”

— Simon Mo, Harry Mellor at vLLM

“Standardization is key to accelerating AI innovation. Transformers v5 empowers the SGLang team to spend less time on model reimplementation and more time on kernel optimization. We look forward to building a more …”

— [continued in the original article]
