Cognibotics Helps Advance Motion Layer for AI-Guided Surgical Robotics in CAISA

February 12, 2026


Why It Matters

A reliable motion layer is critical for translating AI insights into precise, repeatable robot actions, accelerating the path toward clinical AI‑assisted surgery.

Key Takeaways

- Cognibotics supplies unified motion interface for AI robotics

- CAISA testbed includes approximately 350 m² facility with a full operating‑room environment

- Over 3,000 hours of pediatric heart surgery video collected

- Vinnova funds project with SEK 10 million through August 2027

- Industrial‑grade motion control supports safe, repeatable instrument handling

Pulse Analysis

The motion layer that Cognibotics delivers acts as the nervous system for AI‑guided surgical robots, converting visual perception and planning outputs into smooth, predictable movements. By unifying multiple command pathways behind one interface, the software lets researchers experiment with different AI models without re‑engineering low‑level robot code. This approach draws on Cognibotics’ experience in high‑speed warehousing and precision machining, where reliability and latency are paramount.

CAISA’s infrastructure combines a video repository of more than 3,000 hours with a purpose‑built testbed of approximately 350 m² that replicates a full operating‑room environment. The dataset is used to train deep‑learning models that recognize and estimate the pose of instruments, hands, and cardiac anatomy, while the testbed offers a controlled setting for evaluating robotics and safety functions, with the possibility of working with donated bodies for research. Backed by Vinnova’s SEK 10 million grant, the project brings together clinicians, academia, and robotics firms, so that technical advances are grounded in real surgical workflows.

Beyond the immediate research goals, the CAISA demonstrator signals a broader shift toward AI‑augmented surgery. As motion control matures, manufacturers could offer modular AI assistants that integrate with existing surgical robots, reducing development cycles and costs. This could accelerate adoption in high‑volume specialties, open new revenue streams for robotics vendors, and ultimately improve patient outcomes by enhancing precision and consistency in complex procedures.


Cognibotics is contributing its motion software to the Vinnova‑funded CAISA (Collaborative Artificial Intelligent Surgical Assistant) project as it enters the demonstrator phase.

In CAISA, Cognibotics provides the motion layer between the AI‑based perception and path‑planning modules and the robot.

In contrast to using the standard robot controller, the Cognibotics solution enables the robot to be commanded in different ways as AI and planning modules require – supporting consistent, maintainable, yet dynamic instrument handling in a non‑clinical paediatric heart surgery research testbed.

From surgical data to motion demonstrator

CAISA is a collaboration between Region Skåne (Paediatric Heart Centre at Skåne University Hospital), Lund University, Cognibotics and Cobotic. The project is funded by Vinnova with SEK 10 million (approximately $1.1 million) and runs from September 2024 to August 2027.

In the initial phase, several key building blocks have been put in place:

1. Large‑scale surgical video dataset for model development

The Paediatric Heart Centre has collected a surgical video database of more than 3,000 hours. This data is used to train AI models that recognise and estimate the pose of instruments, hands, and cardiac anatomy.

2. Full‑scale testbed for validation

On the hospital campus, an innovation and testbed facility of approximately 350 m² includes a full operating‑room environment, with the possibility of working with donated bodies for research. This provides a controlled setting for evaluating robotics and safety functions before any clinical deployment is considered.

3. Clear requirement for a demonstrator

In the Vinnova project description, the creation, testing, and validation of a demonstrator in this dedicated test environment is an explicit goal. The project is now entering this demonstrator phase.

Cognibotics: Motion‑control layer for an AI‑guided assistant

Within CAISA, Cognibotics focuses on the motion layer that connects multiple “ways of commanding” the robot. Cognibotics provides a unified motion interface where perception, planning, and application logic can drive motion in a consistent, predictable way in the testbed environment.
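The idea of a unified motion interface can be illustrated with a minimal Python sketch. It is not Cognibotics' software or API: the names `MotionLayer`, `PoseTarget`, `VelocityCommand` and `submit` are hypothetical, and the workspace clamp is only an assumed stand-in for the kind of constraint such a layer might enforce. The sketch shows two different command styles, coming from different modules, passing through one entry point that applies the same rule to both.

```python
from dataclasses import dataclass
from typing import List, Tuple, Union

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class PoseTarget:
    """Hypothetical command: move the tool tip to a Cartesian point (metres)."""
    x: float
    y: float
    z: float


@dataclass(frozen=True)
class VelocityCommand:
    """Hypothetical command: move at a velocity (m/s) for `duration` seconds."""
    vx: float
    vy: float
    vz: float
    duration: float


Command = Union[PoseTarget, VelocityCommand]


class MotionLayer:
    """Illustrative single entry point for all command sources (not a real API)."""

    def __init__(self, workspace_min: Vec3, workspace_max: Vec3,
                 start: Vec3 = (0.0, 0.0, 0.0)) -> None:
        self.workspace_min = workspace_min
        self.workspace_max = workspace_max
        self.position: Vec3 = start
        self.log: List[Tuple[str, str]] = []  # (source, command type)

    def _clamp(self, point: Vec3) -> Vec3:
        # Keep every target inside the allowed workspace box.
        return tuple(
            min(max(value, lo), hi)
            for value, lo, hi in zip(point, self.workspace_min, self.workspace_max)
        )

    def submit(self, source: str, command: Command) -> Vec3:
        """Convert any supported command into one clamped position update."""
        if isinstance(command, PoseTarget):
            target = (command.x, command.y, command.z)
        elif isinstance(command, VelocityCommand):
            velocity = (command.vx, command.vy, command.vz)
            target = tuple(p + v * command.duration
                           for p, v in zip(self.position, velocity))
        else:
            raise TypeError(f"unsupported command: {command!r}")
        self.position = self._clamp(target)
        self.log.append((source, type(command).__name__))
        return self.position


# Two modules command the robot in different ways through the same layer.
layer = MotionLayer(workspace_min=(-0.1, -0.1, 0.0), workspace_max=(0.1, 0.1, 0.2))
layer.submit("planner", PoseTarget(0.05, 0.0, 0.1))
layer.submit("perception", VelocityCommand(0.1, 0.0, 0.0, 1.0))
print(layer.position)  # x is held at the workspace limit
```

In this sketch, the planner and the perception module never touch the robot directly. Swapping either one for a different model would leave the clamping and logging behaviour unchanged, which is the property the unified interface is meant to provide.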

By bringing experience from high‑precision industrial robots into the surgical research context, Cognibotics contributes methods for:

- model‑based motion control with attention to environment constraints;

- fine‑grained positioning in a confined workspace around the patient; and

- motion behaviours that can support safe, repeatable interactions between human surgeon, instruments and robot.

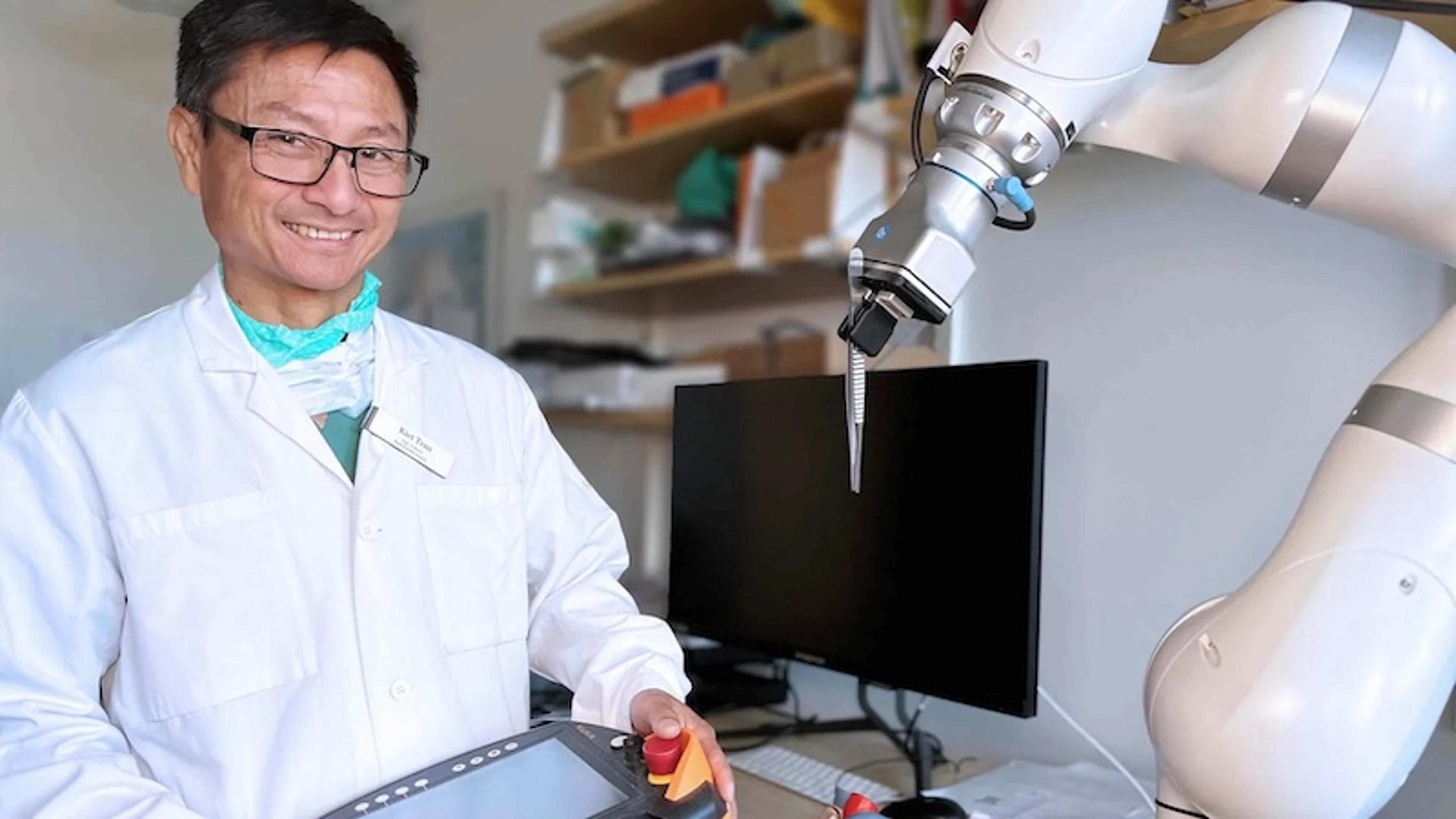

Phan‑Kiet Tran, senior consultant in paediatric heart surgery at Skåne University Hospital and associate professor at Lund University, says:

“In CAISA we are combining world‑class paediatric heart surgery, advanced AI and Cognibotics’ expertise in motion control.

The robot must not only ‘understand’ the situation, it also has to move instruments in a way that feels natural, safe and repeatable for the whole team. Cognibotics provides that industrial‑grade motion layer, which is essential if an AI assistant is to be used in real operating rooms.”

From industry to future surgical applications

Cognibotics’ motion technology is already used in demanding industrial settings such as high‑speed warehouse robots and precision machining. CAISA extends that expertise into the medical research domain, exploring how a similar motion‑control foundation can support future AI‑guided surgical assistants.

The demonstrator developed in CAISA is strictly a research platform in a non‑clinical environment. However, the project aims to build the technical and clinical understanding needed for future systems where AI, surgeons and robots work together more safely and efficiently.
