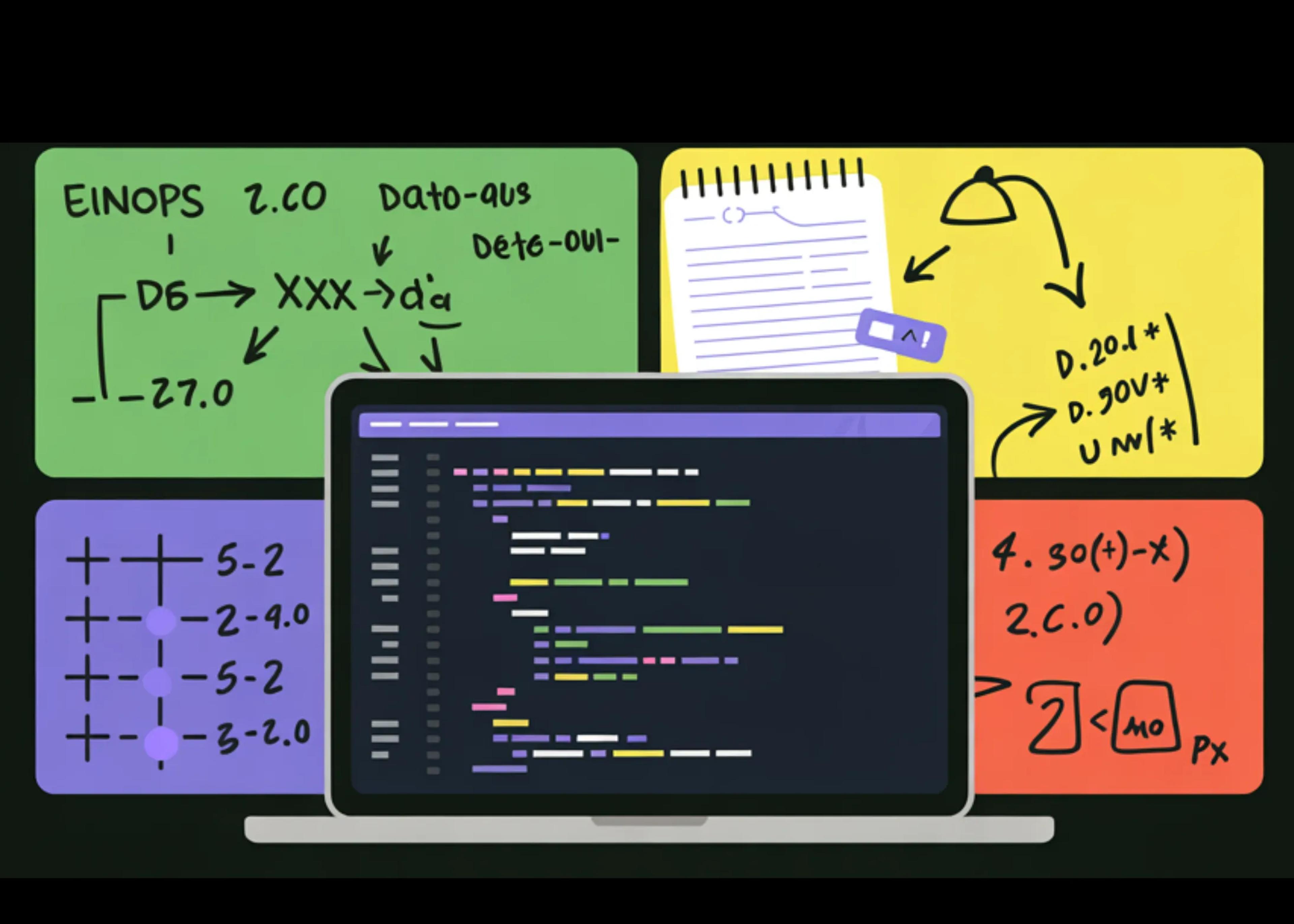

How to Design Complex Deep Learning Tensor Pipelines Using Einops with Vision, Attention, and Multimodal Examples

•February 10, 2026

0

Companies Mentioned

Why It Matters

Einops dramatically reduces development friction and shape‑related bugs, accelerating model prototyping and deployment in vision and multimodal AI systems.

Key Takeaways

- •Einops simplifies tensor reshaping, reducing bugs

- •Supports rearrange, reduce, repeat, einsum, pack/unpack

- •Enables clear vision patchification and attention pipelines

- •Integrates seamlessly with PyTorch modules and layers

- •Improves code readability and maintainability for multimodal models

Pulse Analysis

Einops has emerged as a lightweight domain‑specific language that bridges the gap between mathematical notation and practical code. By allowing developers to declare tensor rearrangements, reductions, and broadcasts in a single, expressive string, it eliminates the verbose indexing logic that traditionally plagues PyTorch scripts. This not only cuts down on lines of code but also introduces runtime shape checks, catching mismatches early in the development cycle and reducing costly debugging sessions.

In computer‑vision models, patchifying images into token sequences is a foundational step for Vision Transformers and related architectures. Using Einops’s rearrange function, developers can convert a batch of images into patches with a single declarative statement, ensuring that the spatial dimensions are correctly handled regardless of input size. The tutorial further demonstrates how the same syntax scales to multi‑head attention, where queries, keys, and values are split across heads and processed with einsum, preserving clarity while delivering the performance of native PyTorch operations.

Beyond vision, the tutorial highlights multimodal token packing, where class tokens, image embeddings, and text embeddings are merged into a unified tensor for joint processing. The pack and unpack utilities maintain a compact representation without sacrificing the ability to retrieve original segment shapes, a feature especially valuable in transformer‑based multimodal models. By embedding Einops layers directly into PyTorch modules, engineers can build modular, reusable components that are both easy to read and performant, positioning Einops as a strategic tool for modern AI development.

How to Design Complex Deep Learning Tensor Pipelines Using Einops with Vision, Attention, and Multimodal Examples

In this tutorial, we walk through advanced usage of Einops to express complex tensor transformations in a clear, readable, and mathematically precise way. We demonstrate how rearrange, reduce, repeat, einsum, and pack/unpack let us reshape, aggregate, and combine tensors without relying on error-prone manual dimension handling. We focus on real deep-learning patterns, such as vision patchification, multi-head attention, and multimodal token mixing, and show how einops serves as a compact tensor manipulation language that integrates naturally with PyTorch. Check out the FULL CODES here.

Copy CodeCopiedUse a different Browser

import sys, subprocess, textwrap, math, time

def pip_install(pkg: str):

subprocess.check_call(sys.executable, "-m", "pip", "install", "-q", pkg)

pip_install("einops")

pip_install("torch")

import torch

import torch.nn as nn

import torch.nn.functional as F

from einops import rearrange, reduce, repeat, einsum, pack, unpack

from einops.layers.torch import Rearrange, Reduce

torch.manual_seed(0)

device = "cuda" if torch.cuda.is_available() else "cpu"

print("Device:", device)

def section(title: str):

print("\n" + "=" * 90)

print(title)

print("=" * 90)

def show_shape(name, x):

print(f"{name:>18} shape = {tuple(x.shape)} dtype={x.dtype} device={x.device}")

We set up the execution environment and ensure all required dependencies are installed dynamically. We initialize PyTorch, einops, and utility helpers that standardize device selection and shape inspection. We also establish reusable printing utilities that help us track tensor shapes throughout the tutorial.

Copy CodeCopiedUse a different Browser

section("1) rearrange")

x = torch.randn(2, 3, 4, 5, device=device)

show_shape("x", x)

x_bhwc = rearrange(x, "b c h w -> b h w c")

show_shape("x_bhwc", x_bhwc)

x_split = rearrange(x, "b (g cg) h w -> b g cg h w", g=3)

show_shape("x_split", x_split)

x_tokens = rearrange(x, "b c h w -> b (h w) c")

show_shape("x_tokens", x_tokens)

y = torch.randn(2, 7, 11, 13, 17, device=device)

y2 = rearrange(y, "b ... c -> b c ...")

show_shape("y", y)

show_shape("y2", y2)

try:

_ = rearrange(torch.randn(2, 10, device=device), "b (h w) -> b h w", h=3)

except Exception as e:

print("Expected error (shape mismatch):", type(e).__name__, "-", str(e):140)

We demonstrate how we use rearrange to express complex reshaping and axis-reordering operations in a readable, declarative way. We show how to split, merge, and permute dimensions while preserving semantic clarity. We also intentionally trigger a shape error to illustrate how Einops enforces shape safety at runtime.

Copy CodeCopiedUse a different Browser

section("2) reduce")

imgs = torch.randn(8, 3, 64, 64, device=device)

show_shape("imgs", imgs)

gap = reduce(imgs, "b c h w -> b c", "mean")

show_shape("gap", gap)

pooled = reduce(imgs, "b c (h ph) (w pw) -> b c h w", "mean", ph=2, pw=2)

show_shape("pooled", pooled)

chmax = reduce(imgs, "b c h w -> b c", "max")

show_shape("chmax", chmax)

section("3) repeat")

vec = torch.randn(5, device=device)

show_shape("vec", vec)

vec_batched = repeat(vec, "d -> b d", b=4)

show_shape("vec_batched", vec_batched)

q = torch.randn(2, 32, device=device)

q_heads = repeat(q, "b d -> b heads d", heads=8)

show_shape("q_heads", q_heads)

We apply reduce and repeat to perform pooling, aggregation, and broadcasting operations without manual dimension handling. We compute global and local reductions directly within the transformation expression. We also show how repeating tensors across new dimensions simplifies batch and multi-head constructions.

Copy CodeCopiedUse a different Browser

section("4) patchify")

B, C, H, W = 4, 3, 32, 32

P = 8

img = torch.randn(B, C, H, W, device=device)

show_shape("img", img)

patches = rearrange(img, "b c (h p1) (w p2) -> b (h w) (p1 p2 c)", p1=P, p2=P)

show_shape("patches", patches)

img_rec = rearrange(

patches,

"b (h w) (p1 p2 c) -> b c (h p1) (w p2)",

h=H // P,

w=W // P,

p1=P,

p2=P,

c=C,

)

show_shape("img_rec", img_rec)

max_err = (img - img_rec).abs().max().item()

print("Reconstruction max abs error:", max_err)

assert max_err < 1e-6

section("5) attention")

B, T, D = 2, 64, 256

Hh = 8

Dh = D // Hh

x = torch.randn(B, T, D, device=device)

show_shape("x", x)

proj = nn.Linear(D, 3 * D, bias=False).to(device)

qkv = proj(x)

show_shape("qkv", qkv)

q, k, v = rearrange(qkv, "b t (three heads dh) -> three b heads t dh", three=3, heads=Hh, dh=Dh)

show_shape("q", q)

show_shape("k", k)

show_shape("v", v)

scale = Dh ** -0.5

attn_logits = einsum(q, k, "b h t dh, b h s dh -> b h t s") * scale

show_shape("attn_logits", attn_logits)

attn = attn_logits.softmax(dim=-1)

show_shape("attn", attn)

out = einsum(attn, v, "b h t s, b h s dh -> b h t dh")

show_shape("out (per-head)", out)

out_merged = rearrange(out, "b h t dh -> b t (h dh)")

show_shape("out_merged", out_merged)

We implement vision and attention mechanisms that are commonly found in modern deep learning models. We convert images into patch sequences and reconstruct them to verify reversibility and correctness. We then reshape projected tensors into a multi-head attention format and compute attention using einops.einsum for clarity and correctness.

Copy CodeCopiedUse a different Browser

section("6) pack unpack")

B, Cemb = 2, 128

class_token = torch.randn(B, 1, Cemb, device=device)

image_tokens = torch.randn(B, 196, Cemb, device=device)

text_tokens = torch.randn(B, 32, Cemb, device=device)

show_shape("class_token", class_token)

show_shape("image_tokens", image_tokens)

show_shape("text_tokens", text_tokens)

packed, ps = pack(class_token, image_tokens, text_tokens, "b * c")

show_shape("packed", packed)

print("packed_shapes (ps):", ps)

mixer = nn.Sequential(

nn.LayerNorm(Cemb),

nn.Linear(Cemb, 4 * Cemb),

nn.GELU(),

nn.Linear(4 * Cemb, Cemb),

).to(device)

mixed = mixer(packed)

show_shape("mixed", mixed)

class_out, image_out, text_out = unpack(mixed, ps, "b * c")

show_shape("class_out", class_out)

show_shape("image_out", image_out)

show_shape("text_out", text_out)

assert class_out.shape == class_token.shape

assert image_out.shape == image_tokens.shape

assert text_out.shape == text_tokens.shape

section("7) layers")

class PatchEmbed(nn.Module):

def __init__(self, in_channels=3, emb_dim=192, patch=8):

super().__init__()

self.patch = patch

self.to_patches = Rearrange("b c (h p1) (w p2) -> b (h w) (p1 p2 c)", p1=patch, p2=patch)

self.proj = nn.Linear(in_channels * patch * patch, emb_dim)

def forward(self, x):

x = self.to_patches(x)

return self.proj(x)

class SimpleVisionHead(nn.Module):

def __init__(self, emb_dim=192, num_classes=10):

super().__init__()

self.pool = Reduce("b t c -> b c", reduction="mean")

self.classifier = nn.Linear(emb_dim, num_classes)

def forward(self, tokens):

x = self.pool(tokens)

return self.classifier(x)

patch_embed = PatchEmbed(in_channels=3, emb_dim=192, patch=8).to(device)

head = SimpleVisionHead(emb_dim=192, num_classes=10).to(device)

imgs = torch.randn(4, 3, 32, 32, device=device)

tokens = patch_embed(imgs)

logits = head(tokens)

show_shape("tokens", tokens)

show_shape("logits", logits)

section("8) practical")

x = torch.randn(2, 32, 16, 16, device=device)

g = 8

xg = rearrange(x, "b (g cg) h w -> (b g) cg h w", g=g)

show_shape("x", x)

show_shape("xg", xg)

mean = reduce(xg, "bg cg h w -> bg 1 1 1", "mean")

var = reduce((xg - mean) ** 2, "bg cg h w -> bg 1 1 1", "mean")

xg_norm = (xg - mean) / torch.sqrt(var + 1e-5)

x_norm = rearrange(xg_norm, "(b g) cg h w -> b (g cg) h w", b=2, g=g)

show_shape("x_norm", x_norm)

z = torch.randn(3, 64, 20, 30, device=device)

z_flat = rearrange(z, "b c h w -> b c (h w)")

z_unflat = rearrange(z_flat, "b c (h w) -> b c h w", h=20, w=30)

assert (z - z_unflat).abs().max().item() < 1e-6

show_shape("z_flat", z_flat)

section("9) views")

a = torch.randn(2, 3, 4, 5, device=device)

b = rearrange(a, "b c h w -> b h w c")

print("a.is_contiguous():", a.is_contiguous())

print("b.is_contiguous():", b.is_contiguous())

print("b._base is a:", getattr(b, "_base", None) is a)

section("Done You now have reusable einops patterns for vision, attention, and multimodal token packing")

We demonstrate reversible token packing and unpacking for multimodal and transformer-style workflows. We integrate Einops layers directly into PyTorch modules to build clean, composable model components. We conclude by applying practical tensor grouping and normalization patterns that reinforce how einops simplifies real-world model engineering.

In conclusion, we established Einops as a practical and expressive foundation for modern deep-learning code. We showed that complex operations like attention reshaping, reversible token packing, and spatial pooling can be written in a way that is both safer and more readable than traditional tensor operations. With these patterns, we reduced cognitive overhead and minimized shape bugs. We wrote models that are easier to extend, debug, and reason about while remaining fully compatible with high-performance PyTorch workflows.

Check out the FULL CODES here. Also, feel free to follow us on Twitter and don’t forget to join our 100k+ ML SubReddit and Subscribe to our Newsletter. Wait! are you on telegram? now you can join us on telegram as well.

The post How to Design Complex Deep Learning Tensor Pipelines Using Einops with Vision, Attention, and Multimodal Examples appeared first on MarkTechPost.

0

Comments

Want to join the conversation?

Loading comments...