Samsung Shipping Fast and Small PCIe Gen5 Bus 4TB Mini-Gumstick Drive

February 3, 2026

Why It Matters

The PM9E1 provides the bandwidth and capacity needed to keep AI training pipelines moving, reducing data‑loading bottlenecks in compact, high‑performance systems. Its launch signals Samsung’s aggressive push into AI‑centric storage solutions, challenging rivals in a fast‑growing market.

Key Takeaways

- 4 TB PM9E1 fits the M.2 2242 form factor, suited to AI PCs
- PCIe Gen5 delivers 14.5 GB/s sequential read and 12.6 GB/s sequential write
- Random IOPS reach 2 million read and 2.64 million write
- 236-layer Gen 8 V-NAND, with DRAM on both sides of the PCB
- 5 nm Presto controller; SPDM device authentication and firmware tamper attestation

Pulse Analysis

AI model training and inference demand massive data streams, and storage is increasingly a bottleneck in modern workstations. By moving to a PCIe Gen5 interface and packing 4 TB into a 22 × 42 mm M.2 2242 package, Samsung addresses both bandwidth and space constraints. The Gen5 link, 236-layer Gen 8 V-NAND, and dual-sided DRAM layout support sequential reads of 14.5 GB/s and writes of 12.6 GB/s, while random I/O peaks at 2 million read and 2.64 million write IOPS. That is performance to rival many desktop-class SSDs in roughly half the length of a standard 80 mm M.2 2280 drive.
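Random IOPS figures are easier to compare with the sequential ratings once converted to a data rate. The short Python sketch below does that conversion under one loudly stated assumption: that each random operation transfers 4 KiB, a common benchmark size that the article does not actually specify.

```python
# Convert Samsung's quoted random IOPS into an approximate data rate.
# ASSUMPTION: each random operation moves 4 KiB (4,096 bytes); the article
# does not state the transfer size behind the IOPS figures.
BLOCK_BYTES = 4096


def iops_to_gbps(iops: float, block_bytes: int = BLOCK_BYTES) -> float:
    """Return throughput in decimal GB/s for a given IOPS rate and transfer size."""
    return iops * block_bytes / 1e9


random_read_iops = 2_000_000
random_write_iops = 2_640_000

read_gbps = iops_to_gbps(random_read_iops)
write_gbps = iops_to_gbps(random_write_iops)
print(f"random read:  {read_gbps:.2f} GB/s")
print(f"random write: {write_gbps:.2f} GB/s")
```

Under that assumption, 2 million read IOPS works out to roughly 8.2 GB/s and 2.64 million write IOPS to roughly 10.8 GB/s, both below the sequential ratings, as is typical for small random transfers.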

The technical leap over Samsung's own PM9A1 is stark: the older Gen4-based 2 TB model topped out at 7 GB/s read and 5.2 GB/s write, less than half the throughput of the new Gen5 drive. The gain is more than a numbers game; it translates into faster checkpointing for large language models and shorter waits when loading massive datasets. The dual-sided PCB layout, which Samsung says complicated thermal management and mechanical reliability, showcases its packaging expertise, fitting all the critical components (NAND, DRAM, and controller) onto a miniature board.

Beyond raw speed, the PM9E1 embeds SPDM-based device authentication and firmware tamper attestation, aligning with the heightened security expectations of enterprise AI deployments. Coupled with a 5 nm Presto controller whose firmware is tuned for Nvidia CUDA and DGX Spark OS, the drive positions Samsung as a serious contender in the AI-optimized storage niche. Competitors will need to match this blend of compact form factor, Gen5 performance, and built-in security to stay relevant as AI workloads continue to scale.

Samsung announced its PM9E1 gumstick drive in October 2024 with 512 GB, 1 TB, and 2 TB capacities. It is now shipping a 4 TB version, which the company says is a good fit for Nvidia DGX Spark AI desktop workstations.

The drive is a dual-sided M.2 2242 model optimized for space-constrained AI PCs and high-performance laptops. It is built from 1 Tb dies of Samsung's 236-layer Gen 8 V-NAND in TLC (3 bits/cell) format, with DRAM on both sides of the board alongside the NAND, a pseudo-SLC cache, and a four-lane PCIe Gen5 interface. Samsung reports up to 2 million random read IOPS and 2.64 million random write IOPS, with sequential read bandwidth of 14.5 GB/s and sequential write bandwidth of 12.6 GB/s.
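To give a feel for what "1 Tb dies" means for a 4 TB drive, here is a rough Python calculation. It assumes a 1 Tb die stores 2^40 bits (128 GiB) and that the advertised 4 TB is decimal; Samsung does not publish the die count, and the power-of-two layout in the second step is an illustrative guess, not a confirmed design.

```python
import math

# Rough NAND die-count arithmetic for the 4 TB PM9E1.
# ASSUMPTIONS: a "1 Tb" die stores 2**40 bits (128 GiB), and the advertised
# 4 TB is decimal (4 * 10**12 bytes). Samsung does not publish the die count,
# and real drives carry extra raw NAND for spare blocks and overprovisioning.
DIE_BITS = 2**40
DIE_BYTES = DIE_BITS // 8        # 137,438,953,472 bytes = 128 GiB
USER_BYTES = 4 * 10**12          # advertised capacity, decimal TB

min_dies = math.ceil(USER_BYTES / DIE_BYTES)
print(f"bytes per 1 Tb die: {DIE_BYTES:,}")
print(f"minimum dies for 4 TB of user space: {min_dies}")

# HYPOTHETICAL: if the drive used a power-of-two die count (common, but not
# confirmed for this drive), the extra raw capacity beyond 4 TB would be:
dies_pow2 = 2 ** math.ceil(math.log2(min_dies))
spare = dies_pow2 * DIE_BYTES / USER_BYTES - 1
print(f"power-of-two die count: {dies_pow2}, spare raw capacity: {spare:.1%}")
```

The point of the second step is only that raw NAND usually exceeds user capacity; here about 10% headroom appears if 32 dies were used.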

Samsung notes that the PM9E1 follows its earlier 2021-era PM9A1, a PCIe Gen4 drive in M.2 2280 format with 2 TB capacity using 128-layer Gen 6 V-NAND and TLC technology. The PM9A1 delivered 1 million random read IOPS, 850,000 random write IOPS, and sequential read/write speeds of 7 GB/s and 5.2 GB/s, respectively. That is considerably slower, because the Gen4 PCIe bus runs at half the per-lane speed of the Gen5 bus.
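The "half the speed" comparison follows from the per-lane signaling rates: 16 GT/s for PCIe 4.0 and 32 GT/s for PCIe 5.0, both using 128b/130b line coding. The Python sketch below computes the theoretical x4 link ceiling for each and how much of it each drive's quoted sequential read uses. It ignores packet-level protocol overhead, so real achievable ceilings are somewhat lower than these figures.

```python
# Theoretical x4 link bandwidth for PCIe Gen4 and Gen5, before packet
# (TLP/DLLP) protocol overhead, compared with the quoted sequential reads.
ENCODING = 128 / 130  # both Gen4 and Gen5 use 128b/130b line coding
LANES = 4


def link_gbps(gt_per_s: float, lanes: int = LANES) -> float:
    """Payload bandwidth in decimal GB/s after line-coding overhead only."""
    return gt_per_s * ENCODING * lanes / 8  # 8 bits per byte


gen4 = link_gbps(16.0)  # PCIe 4.0: 16 GT/s per lane
gen5 = link_gbps(32.0)  # PCIe 5.0: 32 GT/s per lane

drives = {
    "PM9A1 (Gen4)": (7.0, gen4),
    "PM9E1 (Gen5)": (14.5, gen5),
}
for name, (seq_read, ceiling) in drives.items():
    print(f"{name}: link ceiling {ceiling:.2f} GB/s, "
          f"sequential read uses {seq_read / ceiling:.0%} of it")
```

Both drives sit close to their link ceilings (roughly 89% and 92%), which is why doubling the bus rate roughly doubles sequential throughput.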

4 TB PM9E1 speeds vs. (2 TB) PM9A1 speeds

The 4 TB PM9E1 in its M.2 2242 format is more compact than the M.2 2280 format PM9E1 drives with 512 GB, 1 TB, and 2 TB capacities. A Samsung tech blog quotes Task Leader Sunki Yun:

“We decided to apply a smaller NAND package—originally planned for the V9 generation—to V8 as well. Thanks to close collaboration with the Package Development Team, we were able to accelerate development and move the project forward quickly. Because of the strict space limitations, we had to place all the components including NAND, DRAM, and the controller on both sides of a very small PCB. This brought additional challenges in PCB layout, BOM optimization, thermal management, and mechanical reliability.”

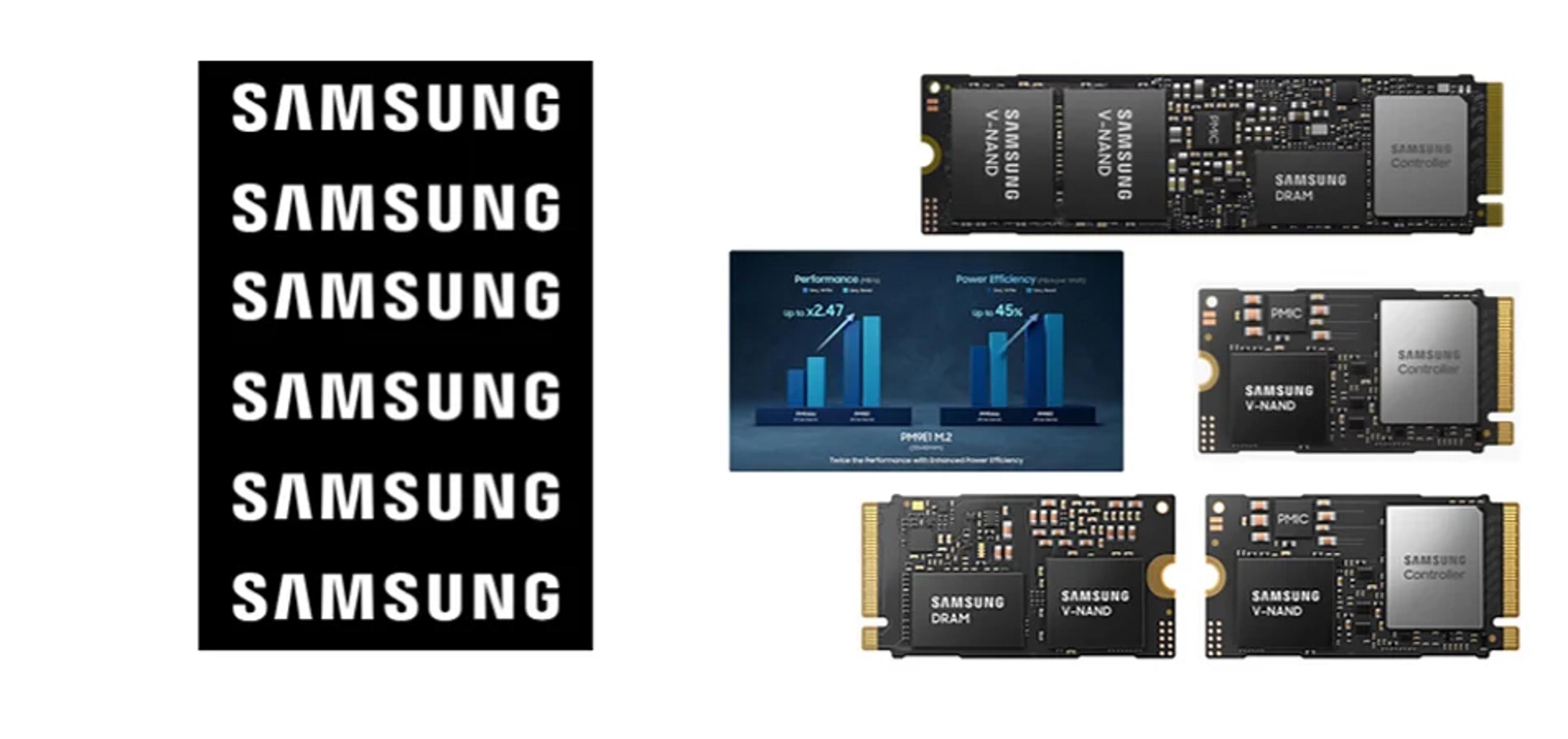

Samsung M.2 2280-format PM9E1 drive (top). M.2 2242-format 4 TB PM9E1 (middle), with both sides shown at the bottom.

According to Samsung, the drive includes Device Authentication and Firmware Tampering Attestation security features based on version 1.2 of the DMTF Security Protocol and Data Model (SPDM) specification. It uses an in-house 'Presto' controller built on Samsung Foundry's 5 nm process, with firmware optimized for DGX Spark OS, Nvidia CUDA, and the overall AI user experience.
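SPDM authentication and measurement exchanges are challenge-response protocols: the host sends a fresh nonce, and the device returns its firmware measurement bound to that nonce with a signature the host can check. The Python sketch below is a toy illustration of that idea only, not Samsung's implementation or the SPDM message format. It swaps SPDM's certificate-based asymmetric signature for an HMAC with a shared key so it runs on the standard library alone, and the names `ToyDrive` and `verify` are hypothetical.

```python
import hashlib
import hmac
import secrets

# Toy model of nonce-based firmware attestation, loosely patterned on SPDM.
# SIMPLIFICATION: real SPDM signs with a device private key verified against
# a certificate chain; a shared HMAC key stands in for that here.
# ToyDrive and verify are hypothetical names, not part of any real API.


class ToyDrive:
    def __init__(self, firmware: bytes, device_key: bytes):
        self._firmware = firmware
        self._key = device_key

    def get_measurement(self, nonce: bytes) -> tuple[bytes, bytes]:
        """Return (firmware digest, MAC over nonce + digest).

        Assumes the digest is taken by an immutable root of trust, so
        tampered firmware cannot report a false measurement.
        """
        digest = hashlib.sha384(self._firmware).digest()
        tag = hmac.new(self._key, nonce + digest, hashlib.sha384).digest()
        return digest, tag


def verify(drive: ToyDrive, device_key: bytes, golden_digest: bytes) -> bool:
    """Challenge the drive with a fresh nonce and check its answer."""
    nonce = secrets.token_bytes(32)  # fresh nonce blocks replay of old answers
    digest, tag = drive.get_measurement(nonce)
    expected = hmac.new(device_key, nonce + digest, hashlib.sha384).digest()
    authentic = hmac.compare_digest(tag, expected)          # device authentication
    untampered = hmac.compare_digest(digest, golden_digest)  # firmware integrity
    return authentic and untampered


key = secrets.token_bytes(48)
good_fw = b"firmware v1.0"
golden = hashlib.sha384(good_fw).digest()

print(verify(ToyDrive(good_fw, key), key, golden))                      # True
print(verify(ToyDrive(b"patched firmware", key), key, golden))          # False
print(verify(ToyDrive(good_fw, secrets.token_bytes(48)), key, golden))  # False
```

The two checks map onto the two features Samsung names: the MAC (a signature in real SPDM) authenticates the device, and comparing the reported digest with a known-good value detects firmware tampering.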

Samsung does not detail these optimizations, but the tech blog also quotes Alex Choi of Samsung's Memory Products Planning team:

“When running large language models (LLMs), one of the most representative AI workloads, systems must handle extremely intensive data loading and training operations. During training in particular, checkpoint operations—which frequently save the model’s state—require very high sequential write performance.”

In other words, sequential read and write speeds are the key metrics. Choi added:

“These are crucial for quickly loading trained models and enabling fast, seamless inference and retraining.”
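Choi's point about checkpoints can be made concrete with a back-of-envelope calculation. The Python sketch below estimates how long one checkpoint write takes at each drive's quoted sequential write speed. The checkpoint size is a hypothetical example (a 70-billion-parameter model stored as 2-byte bf16 weights, ignoring optimizer state, which can make full training checkpoints several times larger), and it assumes the drive sustains its rated speed throughout, which a pseudo-SLC cache may not allow once the cache fills.

```python
# Back-of-envelope checkpoint write times at the quoted sequential write speeds.
# ASSUMPTIONS: hypothetical 70B-parameter model, 2-byte (bf16) weights only,
# no optimizer state, and rated write speed sustained for the entire write.
PARAMS = 70e9
BYTES_PER_PARAM = 2
checkpoint_gb = PARAMS * BYTES_PER_PARAM / 1e9  # 140 GB

write_speeds_gbps = {"PM9A1 (Gen4)": 5.2, "PM9E1 (Gen5)": 12.6}


def write_seconds(size_gb: float, speed_gbps: float) -> float:
    """Time in seconds to write size_gb at a constant speed_gbps."""
    return size_gb / speed_gbps


for name, speed in write_speeds_gbps.items():
    print(f"{name}: {write_seconds(checkpoint_gb, speed):.1f} s "
          f"for {checkpoint_gb:.0f} GB")
```

Under these assumptions, each checkpoint drops from about 27 seconds on the PM9A1 to about 11 seconds on the PM9E1, and the saving repeats every time training saves its state.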

A Samsung blog discusses the 4 TB PM9E1 in more detail, highlighting its AI‑optimized PCIe Gen5 architecture and performance advantages.
