Exponentially Improved Multiphoton Interference Benchmarking Advances Quantum Technology Scalability

Why It Matters

Efficiently benchmarking photon indistinguishability removes a key bottleneck for scaling photonic quantum computers, accelerating hardware development and commercial adoption.

Accurate benchmarking of multiphoton interference

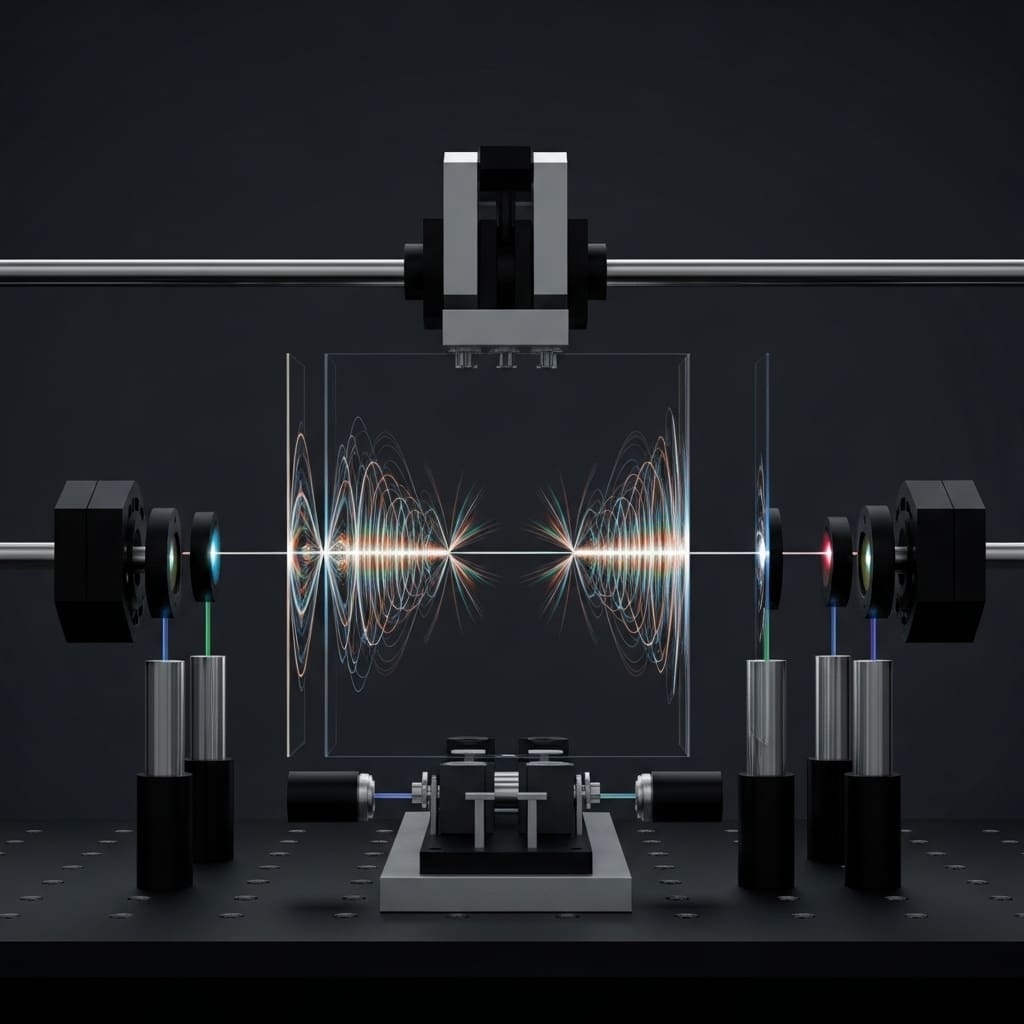

Rodrigo M. Sanz (Universitat Politècnica de València), Emilio Annoni (Centre for Nanosciences and Nanotechnology, Université Paris‑Saclay and Quandela), Stephen C. Wein (Quandela), and colleagues present a new protocol for assessing genuine n‑photon indistinguishability that dramatically improves on existing methods. Their research addresses the exponential growth in sample complexity that has hampered multi‑photon benchmarking, achieving constant sample complexity when the number of photons is prime and sub‑polynomial scaling otherwise. By leveraging theorems relating distinguishability to the suppression laws of quantum Fourier‑transform interferometry, the team demonstrates an exponential improvement in efficiency and validates the approach on Quandela’s quantum processor. The authors describe this as the first scalable method for benchmarking multi‑photon indistinguishability, one that applies to current and near‑term quantum hardware.

Novel QFT Protocol Benchmarks Multi‑Photon Indistinguishability

Scientists have achieved a significant breakthrough in benchmarking multi‑photon indistinguishability, a critical capability for scaling photonic quantum technologies. The research team successfully developed a novel protocol leveraging the quantum Fourier transform (QFT) interferometer to measure genuine n‑photon indistinguishability (GI) with unprecedented efficiency. This new approach overcomes limitations inherent in existing methods, which suffer from exponentially increasing sample complexity as the number of photons increases. The study introduces new theorems that deepen understanding of the relationship between distinguishability and suppression laws within the QFT interferometer.

The protocol exploits two facts: specific output configurations are suppressed for fully indistinguishable photons, and partially distinguishable inputs populate those same configurations uniformly. Together these yield a protocol with dramatically reduced sampling demands. The analysis shows that the QFT‑based protocol achieves constant sample complexity for estimating GI with prime numbers of photons, and sub‑polynomial scaling for all other cases, an exponential improvement over the current state of the art. The researchers proved the optimality of their protocol in numerous scenarios and validated its performance on Quandela’s reconfigurable photonic quantum processor. This experimental validation showed a clear advantage in both runtime and precision over the existing cyclic integrated interferometer (CI) method.

The team observed that even with small system sizes and without the need for photon‑number‑resolving detectors, the QFT protocol delivered faster results and more accurate GI measurements. This work establishes the first scalable method for quantifying multi‑photon indistinguishability, directly addressing a key bottleneck in the advancement of photonic quantum computing. The protocol is readily applicable to current and near‑term quantum hardware, paving the way for more complex and powerful quantum systems. By providing an efficient and reliable means of benchmarking multi‑photon interference, this research promises to accelerate the development of practical photonic quantum technologies and unlock their full potential.

Quantum Indistinguishability via Fourier Transform Post‑Selection

The research detailed a novel methodology for benchmarking multi‑photon indistinguishability, a critical requirement for scaling quantum technologies. Scientists addressed the exponential sample complexity inherent in existing genuine n‑photon indistinguishability (GI) estimation protocols by developing a technique based on the quantum Fourier transform (QFT) interferometer. This work builds upon established relationships between distinguishability and the suppression laws governing the QFT, revealing that partially distinguishable input states populate previously suppressed output configurations with uniform probability. The team engineered a protocol that leverages this insight, post‑selecting on these uniformly populated outcomes to directly estimate GI.
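The suppression law mentioned above can be checked numerically for small systems. The following is a minimal sketch, not the authors' code: it assumes one photon in each input of an n‑mode Fourier interferometer and uses the standard permanent formulas for fully indistinguishable and fully distinguishable photons. The function names (`permanent`, `fourier_matrix`, `output_probabilities`) are hypothetical, and the brute‑force permanent is only practical for a handful of photons.

```python
import itertools
import math

import numpy as np


def permanent(m):
    """Brute-force permanent of a square matrix (fine for n <= 5 or so)."""
    n = m.shape[0]
    return sum(
        np.prod([m[i, p[i]] for i in range(n)])
        for p in itertools.permutations(range(n))
    )


def fourier_matrix(n):
    """Unitary of an n-mode Fourier interferometer: U[j, k] = w^(j*k) / sqrt(n)."""
    idx = np.arange(n)
    return np.exp(2j * np.pi * np.outer(idx, idx) / n) / np.sqrt(n)


def output_probabilities(n):
    """Map each output mode assignment d to (p_indistinguishable, p_distinguishable).

    Assumption: the input is one photon in each of the n input modes.
    """
    u = fourier_matrix(n)
    results = {}
    for d in itertools.combinations_with_replacement(range(n), n):
        sub = u[list(d), :]  # rows picked by the output modes, all input columns
        # Product of factorials of the output occupation numbers.
        mult = math.prod(math.factorial(d.count(k)) for k in range(n))
        p_ind = abs(permanent(sub)) ** 2 / mult
        p_dist = permanent(np.abs(sub) ** 2).real / mult
        results[d] = (p_ind, p_dist)
    return results


if __name__ == "__main__":
    for d, (p_ind, p_dist) in output_probabilities(3).items():
        print(d, round(p_ind, 6), round(p_dist, 6))
```

For three photons, only four of the ten output configurations carry weight when the photons are fully indistinguishable, while fully distinguishable photons reach every configuration. Outcomes in the suppressed set therefore signal distinguishability, which is the kind of event the protocol post‑selects on.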

Crucially, this approach achieves constant sample complexity, O(1), for prime numbers of photons, representing an exponential improvement over the previous state‑of‑the‑art method which scaled as O(4ⁿ). For non‑prime photon numbers, the sample complexity scales sub‑polynomially, still demonstrating a significant advancement in efficiency. Researchers rigorously proved the optimality of their protocol in numerous scenarios, establishing a theoretical foundation for its effectiveness. Experiments were conducted on Quandela’s reconfigurable photonic quantum processor to validate the theoretical findings.

The study employed a system capable of manipulating up to four photons, and compared the performance of the QFT‑based protocol against the established cyclic integrated interferometer (CI) method. Results demonstrated a clear advantage in both runtime and precision, even at these relatively small system sizes and without the need for photon‑number‑resolving detectors. The research established a scalable method for quantifying multi‑photon indistinguishability, applicable to current and near‑term quantum hardware. The methodology hinges on decomposing an n‑photon state into a fully indistinguishable component weighted by the GI probability, alongside distinguishable partition states that are orthogonal to the fully indistinguishable state. By harnessing the unique properties of the QFT and carefully post‑selecting measurement outcomes, the team achieved a breakthrough in efficiently characterizing multi‑photon interference.
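The decomposition described above can be written compactly. The notation below is an assumption chosen for illustration (the article does not give the authors' symbols): $c_1$ stands for the GI probability, $\rho^{\parallel}$ for the fully indistinguishable state, and $\rho_k^{\perp}$ for the partition states with distinguishable registers.

$$
\rho \;=\; c_1\,\rho^{\parallel} \;+\; \sum_{k \ge 2} c_k\,\rho_k^{\perp},
\qquad c_k \ge 0, \quad \sum_{k \ge 1} c_k = 1,
\qquad \operatorname{Tr}\!\left(\rho^{\parallel}\rho_k^{\perp}\right) = 0 .
$$

Read this way, estimating GI amounts to estimating the single weight $c_1$ rather than reconstructing the full state.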

Efficient Benchmarking of Multi‑Photon Indistinguishability

Benchmarking multi‑photon indistinguishability is a crucial capability for many quantum technologies. The team's protocol uses the quantum Fourier transform (QFT) interferometer to assess genuine n‑photon indistinguishability (GI) with significantly improved efficiency. It attains constant sample complexity for estimating GI when the number of photons is prime, and sub‑polynomial scaling in all other cases, a substantial advance over existing methods whose sample complexity grows exponentially with the number of photons. The protocol works by post‑selecting specific outputs of the interferometer.

The researchers showed that the number of samples needed to estimate indistinguishability to a fixed precision grows at most as a very slowly increasing function of n, the number of photons. They achieved this by establishing a relationship between distinguishability and the suppression laws governing the QFT, building on previous work on zero‑transmission laws for fully indistinguishable inputs. The protocol discriminates between fully indistinguishable and distinguishable photon states. The team implemented it on Quandela’s reconfigurable quantum processor and observed a clear advantage in both runtime and precision over state‑of‑the‑art techniques.

The work represents n‑photon states using mode‑assignment vectors, which allows a detailed analysis of transition probabilities between input and output states. Specifically, the team derived a formula for the transition probability of partition states with distinguishable registers, showing how that probability depends on the effective scattering matrix of the interferometer. The authors prove the optimality of the protocol in many relevant scenarios, establishing the first scalable method for benchmarking multi‑photon indistinguishability applicable to current and near‑term quantum hardware. The research also provides a framework for analyzing the suppression of output configurations based on the Q‑value, a mathematical function of the mode assignments, and uses zero‑transmission laws to identify which outcomes are suppressed. The result is a significant reduction in the number of samples needed to verify the indistinguishability of multiple photons, paving the way for more complex and scalable quantum systems.
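To make the mode‑assignment picture concrete, here is a small sketch. It rests on an assumption: the article does not define the Q‑value, so the code uses the form familiar from the zero‑transmission‑law literature for one photon per input mode, namely the sum of the mode‑assignment entries modulo n, with outputs suppressed whenever that value is nonzero. The helper names are hypothetical.

```python
from itertools import combinations_with_replacement


def mode_assignment(occupation):
    """Convert occupation numbers to a mode-assignment vector.

    Example: occupation (2, 0, 1) means two photons in mode 0 and one in
    mode 2, giving the mode assignment (0, 0, 2).
    """
    return tuple(m for m, count in enumerate(occupation) for _ in range(count))


def q_value(d, n):
    """Assumed Q-value: sum of the mode-assignment entries modulo n."""
    return sum(d) % n


def allowed_outputs(n):
    """Mode assignments of n photons in n modes that are NOT suppressed (Q == 0)."""
    return [
        d
        for d in combinations_with_replacement(range(n), n)
        if q_value(d, n) == 0
    ]


if __name__ == "__main__":
    print(mode_assignment((2, 0, 1)))
    print(allowed_outputs(3))
```

With two photons this rule leaves only the two bunched outcomes, which matches the familiar Hong-Ou-Mandel effect on a balanced beam splitter.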
