Blog•Jan 21, 2026

AssetOpsBench: Bridging the Gap Between AI Agent Benchmarks and Industrial Reality

AssetOpsBench is a new benchmark that evaluates agentic AI in industrial asset‑lifecycle management using 2.3M sensor points, 140+ curated scenarios, 4.2K work orders and 53 structured failure modes. It scores agents across six qualitative dimensions (task completion, retrieval accuracy, result verification, sequence correctness, clarity and hallucination rate), giving richer feedback than traditional single‑metric tests. Early community trials show that even top models like GPT‑4.1 score only 68 to 72 points, well below the 85‑point threshold needed for deployment, and the gap is widest when multi‑agent coordination is required. The framework also delivers automated failure‑mode analysis via a trajectory‑level pipeline, helping developers pinpoint why agents falter without exposing sensitive data.

By Hugging Face

Blog•Jan 20, 2026

Differential Transformer V2

The Differential Transformer V2 (DIFF V2) introduces a differential attention operation that doubles query heads while keeping key‑value heads unchanged, eliminating the need for custom attention kernels. By projecting a per‑token, per‑head λ and applying a sigmoid‑scaled subtraction, DIFF V2 removes the...
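The subtraction described above can be illustrated with a minimal sketch. It assumes only what the summary states: two query heads share one key‑value head, and the second head's attention output is scaled by a sigmoid of λ before being subtracted from the first. The function names, the scalar `lam_logit` argument (standing in for the projected per‑token, per‑head λ), and the omission of causal masking and output projections are all simplifications for illustration, not the post's implementation.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attend(q, keys, values):
    """Scaled dot-product attention for a single query vector."""
    scale = 1.0 / math.sqrt(len(q))
    scores = [scale * sum(qi * ki for qi, ki in zip(q, k)) for k in keys]
    weights = softmax(scores)
    dim = len(values[0])
    return [sum(w * v[d] for w, v in zip(weights, values)) for d in range(dim)]


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def diff_attention(q1, q2, keys, values, lam_logit):
    """Two query heads attend over the same key-value head; the second
    output is scaled by sigmoid(lam_logit) and subtracted from the first.
    lam_logit is a hypothetical stand-in for the projected lambda."""
    out1 = attend(q1, keys, values)
    out2 = attend(q2, keys, values)
    gate = sigmoid(lam_logit)
    return [a - gate * b for a, b in zip(out1, out2)]


# Toy inputs: two positions, head dimension 2.
keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[1.0, 2.0], [3.0, 4.0]]
print(diff_attention([0.5, -0.5], [-0.5, 0.5], keys, values, lam_logit=0.0))
```

Because both query heads read the same keys and values, each is an ordinary attention call over an unchanged key‑value cache, which is consistent with the summary's claim that no custom attention kernel is needed.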

By Hugging Face

Blog•Jan 15, 2026

Introducing OptiMind, a Research Model Designed for Optimization

Microsoft Research unveiled OptiMind, a specialized language model that converts natural‑language optimization problems into solver‑ready mathematical formulations. The model is released as an experimental offering on Hugging Face, allowing developers and researchers to test it directly in the platform’s playground. OptiMind...

By Hugging Face

Blog•Jan 15, 2026

Open Responses: What You Need to Know

Open Responses is an open‑source inference standard that extends OpenAI’s Responses API, aiming to replace the legacy Chat Completions format for agentic workloads. It unifies text, image, JSON, and video generation while enabling provider‑side tool execution and autonomous sub‑agent loops....

By Hugging Face

Blog•Jan 6, 2026

Small Yet Mighty: Improve Accuracy In Multimodal Search and Visual Document Retrieval with Llama Nemotron RAG Models

NVIDIA introduced two compact Llama Nemotron models—an image‑text embedding encoder and a cross‑encoder reranker—tailored for multimodal retrieval over visual documents. Both run on typical NVIDIA GPUs, emit a single dense vector per page, and integrate seamlessly with existing vector databases. Benchmarks...

By Hugging Face

Blog•Jan 5, 2026

Generalist Robot Policy Evaluation in Simulation with NVIDIA Isaac Lab-Arena and LeRobot

NVIDIA and Hugging Face have merged NVIDIA Isaac Lab‑Arena with the LeRobot EnvHub, creating an open‑source pipeline for evaluating vision‑language‑action (VLA) robot policies in simulation. The integration gives developers access to pre‑trained GR00T N models, a library of 250+ Lightwheel tasks, and...

By Hugging Face

Blog•Jan 5, 2026

Introducing Falcon H1R 7B

The Technology Innovation Institute unveiled Falcon H1R 7B, a decoder‑only 7‑billion‑parameter LLM that rivals much larger reasoning models. Leveraging a two‑stage pipeline of curated supervised fine‑tuning and reinforcement learning with the GRPO algorithm, the model excels on math, code, and general...

By Hugging Face

Blog•Jan 5, 2026

Introducing Falcon-H1-Arabic: Pushing the Boundaries of Arabic Language AI with Hybrid Architecture

Falcon‑H1‑Arabic introduces a family of 3B, 7B and 34B parameter models that merge Mamba state‑space modules with Transformer attention in a hybrid block design. The architecture expands context windows to 128K tokens for the 3B model and 256K tokens for...

By Hugging Face

Blog•Jan 5, 2026

NVIDIA Brings Agents to Life with DGX Spark and Reachy Mini

At CES 2026 NVIDIA demonstrated how its DGX Spark platform can power a personal AI assistant built on the Reachy Mini robot. Using open‑source Nemotron 3 Nano for reasoning and Nemotron Nano 2 VL for vision, the demo combined NVIDIA’s NeMo Agent Toolkit with ElevenLabs TTS to...

By Hugging Face

Blog•Dec 18, 2025

Tokenization in Transformers V5: Simpler, Clearer, and More Modular

Transformers v5 introduces a major redesign of tokenizers, consolidating each model’s tokenizer into a single, transparent file and exposing the full architecture—normalizer, pre‑tokenizer, model, post‑processor, and decoder—as inspectable properties. The library now defaults to a Rust‑based backend for speed, while...

By Hugging Face

Blog•Dec 17, 2025

The Open Evaluation Standard: Benchmarking NVIDIA Nemotron 3 Nano with NeMo Evaluator

NVIDIA released the Nemotron 3 Nano 30B A3B model alongside a fully open evaluation recipe built with the NeMo Evaluator library. The blog details how developers can reproduce the model‑card results, inspect structured logs, and run the same benchmarks on any inference endpoint. Published YAML...

By Hugging Face

Blog•Dec 11, 2025

New in llama.cpp: Model Management

The llama.cpp server now includes a router mode that enables dynamic loading, unloading, and switching among multiple LLM models without restarting the service. Models are auto‑discovered from the default cache or a user‑specified directory and are launched in separate processes,...

By Hugging Face

Blog•Dec 9, 2025

Apriel-1.6-15b-Thinker: Cost-Efficient Frontier Multimodal Performance

ServiceNow released Apriel-1.6-15B-Thinker, a 15‑billion‑parameter multimodal reasoning model that rivals the performance of models ten times larger. Built on the Apriel‑1.5 foundation, it boosts text and vision reasoning while cutting reasoning token usage by over 30%. Trained on NVIDIA GB200...

By Hugging Face

Blog•Dec 5, 2025

Introducing swift-huggingface: The Complete Swift Client for Hugging Face

Mattt announced swift‑huggingface, a new Swift package that delivers a full‑featured client for the Hugging Face Hub, slated to replace the existing HubApi in swift‑transformers. The library adds reliable, resumable downloads with progress tracking, a Python‑compatible cache, and a flexible...

By Hugging Face

Blog•Dec 4, 2025

DeepMath: A Lightweight Math Reasoning Agent with SmolAgents

DeepMath is a math‑reasoning agent built on the Qwen‑3‑4B Thinking model and fine‑tuned with Group Relative Policy Optimization (GRPO). It replaces verbose chain‑of‑thought text with tiny Python snippets that run in a sandboxed executor, then folds the results back into the...

By Hugging Face

Blog•Dec 4, 2025

We Got Claude to Fine-Tune an Open Source LLM

Anthropic’s Claude Code now leverages a new Hugging Face Skills plugin to fine‑tune open‑source large language models end‑to‑end. The skill generates training scripts, selects appropriate cloud GPUs, submits jobs to Hugging Face Jobs, monitors progress via Trackio, and pushes the finished model to the Hub....

By Hugging Face

Blog•Dec 2, 2025

Custom Policy Enforcement with Reasoning: Faster, Safer AI Applications

NVIDIA unveiled Nemotron Content Safety Reasoning, a model that blends dynamic policy reasoning with production‑grade latency. The system lets developers load natural‑language policies at inference time, enabling nuanced content moderation across e‑commerce, telecom, and healthcare use cases. It achieves low...

By Hugging Face

Social•Dec 1, 2025

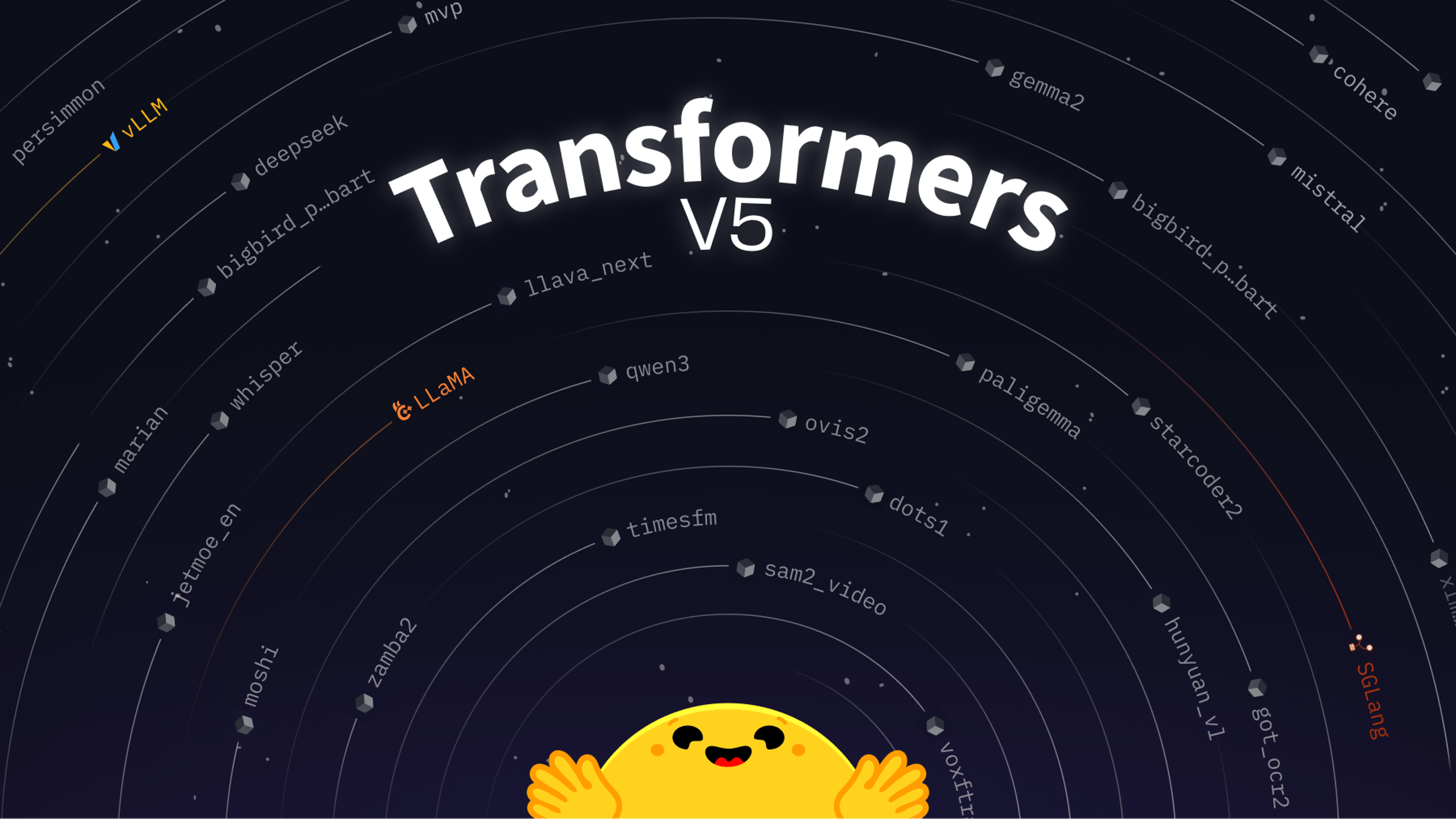

Transformers V5 RC Launches with Seamless Ecosystem Interoperability

Today we release the transformers version 5 RC! 🤗 With this, we enable e2e interoperability with our friends in ecosystem, ease up adding new models and simplify the library 🙌🏻 Read our blog to learn more: https://t.co/ysZW3btRgR https://t.co/PVKFRsH9Z2

By Hugging Face

Blog•Dec 1, 2025

SARLO-80: Worldwide Slant SAR Language Optic Dataset at 80 cm Resolution

The SARLO‑80 dataset aggregates roughly 2,500 Umbra synthetic aperture radar (SAR) scenes and aligns them with high‑resolution optical imagery at a uniform 80 cm slant‑range resolution. Each SAR patch (1,024 × 1,024 px) is co‑registered with an optical counterpart and enriched with English natural‑language...

By Hugging Face

Blog•Dec 1, 2025

Transformers V5: Simple Model Definitions Powering the AI Ecosystem

Hugging Face released Transformers v5.0.0rc‑0, five years after v4’s first release candidate. Daily pip installs have surged to over 3 million, pushing total installations past 1.2 billion. The library now supports more than 400 model architectures and hosts upwards of 750k checkpoints,...

By Hugging Face

Social•Nov 30, 2025

New Profile Status Launched—Showcase Your Weekend Projects

we've recently shipped profile status 🫡 drop below what you've built on Hugging Face Hub this weekend!

By Hugging Face

Social•Nov 25, 2025

Flux.1-dev Ranks #2, Eagerly Awaiting Flux.2-dev

Flux.1-dev has been the second most liked model on Hugging Face just after Deepseek R1 so super excited to see the release of Flux.2-dev by @bfl_ml today! Download the weights or try the model (thanks to @fal) on @huggingface: https://t.co/kdmVlvdLZh Read the...

By Hugging Face

Blog•Nov 25, 2025

Diffusers Welcomes FLUX-2

The post introduces FLUX.2, Black Forest Labs' latest open‑source image generation model, highlighting its new architecture—including a single Mistral Small 3.1 text encoder and a re‑engineered DiT transformer with more single‑stream blocks and bias‑free layers. It offers practical guidance for...

By Hugging Face

Blog•Nov 24, 2025

Building Deep Research: How We Achieved State of the Art

Tavily’s team detailed how they rebuilt their deep‑research AI agent to achieve state‑of‑the‑art performance. By designing a lightweight agent harness, leveraging evolving model tool‑calling abilities, and integrating an advanced search tool, they streamlined orchestration and context handling. Their context‑engineering approach...

By Hugging Face

Blog•Nov 24, 2025

OVHcloud on Hugging Face Inference Providers 🔥

OVHcloud is now an official Inference Provider on the Hugging Face Hub, enabling serverless AI model calls directly from model pages. The service offers pay‑per‑token pricing starting at €0.04 per million tokens and runs on secure European data centers for...
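As a rough illustration of what pay‑per‑token pricing means in practice, the sketch below estimates cost at the quoted starting rate of €0.04 per million tokens. The function name and the workload figures are hypothetical, and because the summary gives only the floor price, the rate for any particular model may be higher.

```python
def estimate_cost_eur(tokens: int, eur_per_million: float = 0.04) -> float:
    """Pay-per-token cost: tokens divided by one million, times the rate.

    The default rate is the starting price quoted in the summary;
    actual per-model rates are an unknown here and may differ.
    """
    if tokens < 0:
        raise ValueError("tokens must be non-negative")
    return tokens / 1_000_000 * eur_per_million


# Hypothetical workload: 10,000 requests averaging 1,500 tokens each.
total_tokens = 10_000 * 1_500
cost = estimate_cost_eur(total_tokens)
print(f"{total_tokens:,} tokens -> about EUR {cost:.2f} at the floor rate")
```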

By Hugging Face

Blog•Nov 21, 2025

Open ASR Leaderboard: Trends and Insights with New Multilingual & Long-Form Tracks

The post introduces new multilingual and long‑form tracks on the Open ASR Leaderboard, highlighting recent trends across 60+ models. It finds that Conformer encoders paired with LLM decoders achieve the best English accuracy, while CTC/TDT decoders offer the highest speed,...

By Hugging Face

Blog•Nov 21, 2025

20x Faster TRL Fine-Tuning with RapidFire AI

The post announces the integration of RapidFire AI with Hugging Face TRL, enabling up to 20× faster fine‑tuning and post‑training experiments by running multiple configurations concurrently on a single GPU through adaptive chunk‑based scheduling. It highlights drop‑in TRL wrappers, real‑time...

By Hugging Face

Social•Nov 20, 2025

Olmo 3 Launch Live: Join the Celebration

BOOM! Olmo 3 has just landed, join us in this livestream to learn more about the release 🤗💗

By Hugging Face

Blog•Nov 20, 2025

Introducing AnyLanguageModel: One API for Local and Remote LLMs on Apple Platforms

The post announces AnyLanguageModel, a Swift package that lets Apple developers swap the Foundation Models import for a unified API supporting local (Core ML, MLX, llama.cpp, Ollama) and cloud (OpenAI, Anthropic, Gemini, Hugging Face) LLM providers with minimal code changes. By...

By Hugging Face