Blog•Nov 19, 2025

Apriel-H1: The Surprising Key to Distilling Efficient Reasoning Models

ServiceNow‑AI converted its 15B attention‑based reasoning model into a hybrid Mamba architecture, achieving 2.1× throughput with negligible quality loss. The key was distilling on the teacher’s high‑quality SFT reasoning traces rather than generic pretraining data, and using reverse KL divergence as the loss. A three‑stage replacement process (identifying low‑impact layers, progressively substituting them with Mamba layers, and a final SFT fine‑tuning pass) produced the Apriel‑H1‑15b‑Thinker‑SFT checkpoint, which outperforms the teacher on several reasoning benchmarks while cutting latency.
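Reverse KL means the divergence is taken under the student's distribution, KL(q_student || p_teacher) = Σ_x q(x) log(q(x) / p(x)), which is mode‑seeking: it penalizes the student for putting probability where the teacher puts little. The sketch below is a generic, framework‑free illustration of that loss over next‑token distributions. It is not Apriel‑H1's training code, and the helper names (`reverse_kl`, `forward_kl`, `sequence_reverse_kl`) are hypothetical; a real distillation loop would compute this on tensors, in log space, over the full vocabulary.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def reverse_kl(student_probs, teacher_probs, eps=1e-12):
    """KL(q_student || p_teacher) = sum_x q(x) * log(q(x) / p(x)).

    The expectation is under the student distribution q, so the loss grows
    when the student assigns mass to tokens the teacher considers unlikely.
    `eps` guards against log(q / 0) when the teacher assigns zero probability.
    """
    if len(student_probs) != len(teacher_probs):
        raise ValueError("distributions must share the same support")
    total = 0.0
    for q, p in zip(student_probs, teacher_probs):
        if q > 0.0:  # terms with q(x) = 0 contribute nothing
            total += q * math.log(q / max(p, eps))
    return total


def forward_kl(student_probs, teacher_probs, eps=1e-12):
    """KL(p_teacher || q_student): same formula with the roles swapped."""
    return reverse_kl(teacher_probs, student_probs, eps)


def sequence_reverse_kl(student_logits, teacher_logits):
    """Mean per-token reverse KL over a sequence of next-token logit vectors
    (hypothetical helper; assumes both models share one vocabulary)."""
    per_token = [
        reverse_kl(softmax(s), softmax(t))
        for s, t in zip(student_logits, teacher_logits)
    ]
    return sum(per_token) / len(per_token)
```

The two directions generally differ: for q = [0.9, 0.1] and p = [0.5, 0.5], reverse KL is about 0.368 nats while forward KL is about 0.511 nats.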

By Hugging Face

Blog•Nov 18, 2025

The Pharmome Map: A Comprehensive Public Dataset for Drug-Target Interaction Modeling

EvE Bio has released the “pharmome map,” the largest public drug‑target interaction dataset to date. It measures 1,397 FDA‑approved small‑molecule drugs against nuclear receptors, GPCRs, and protein kinases using standardized high‑throughput assays. The dataset is openly accessible, refreshed bi‑monthly, and...

By Hugging Face

Blog•Nov 17, 2025

Easily Build and Share ROCm Kernels with Hugging Face

The post explains how to build, test, and share ROCm‑compatible GPU kernels—specifically a high‑performance FP8 GEMM kernel—using Hugging Face’s kernel‑builder and kernels libraries, with a focus on reproducible builds via a flake.nix environment. It walks through project layout, configuration files...

By Hugging Face

Social•Nov 14, 2025

World's Largest AI Hackathon Launches with $20K Prizes

This might be the biggest AI hackathon ever:

* 6,300+ registrants
* Runs for 2 weeks (Nov. 14-30)
* Open to anyone, anywhere, virtually
* $20,000 in cash prizes + $3.5M+ in sponsor credits

Hosted by @Anthropic and @Gradio, along with 10 sponsors, join...

By Hugging Face

Blog•Nov 13, 2025

Join the AMD Open Robotics Hackathon

AMD, Hugging Face, and Data Monsters are launching the AMD Open Robotics Hackathon with in‑person events in Tokyo (December 5‑7, 2025) and Paris (December 12‑14, 2025). Teams of up to four participants will complete two missions—setting up the LeRobot development environment and creating a...

By Hugging Face

Blog•Nov 13, 2025

Building for an Open Future - Our New Partnership with Google Cloud

The post announces a deeper partnership between Hugging Face and Google Cloud aimed at making it easier for companies to build and customize AI using open models. It highlights integrated services such as Vertex AI Model Garden, GKE, Cloud Run,...

By Hugging Face

Social•Nov 6, 2025

Essential Testing Tips for Your Gradio App

Best practices for testing your Gradio app 👇

By Hugging Face

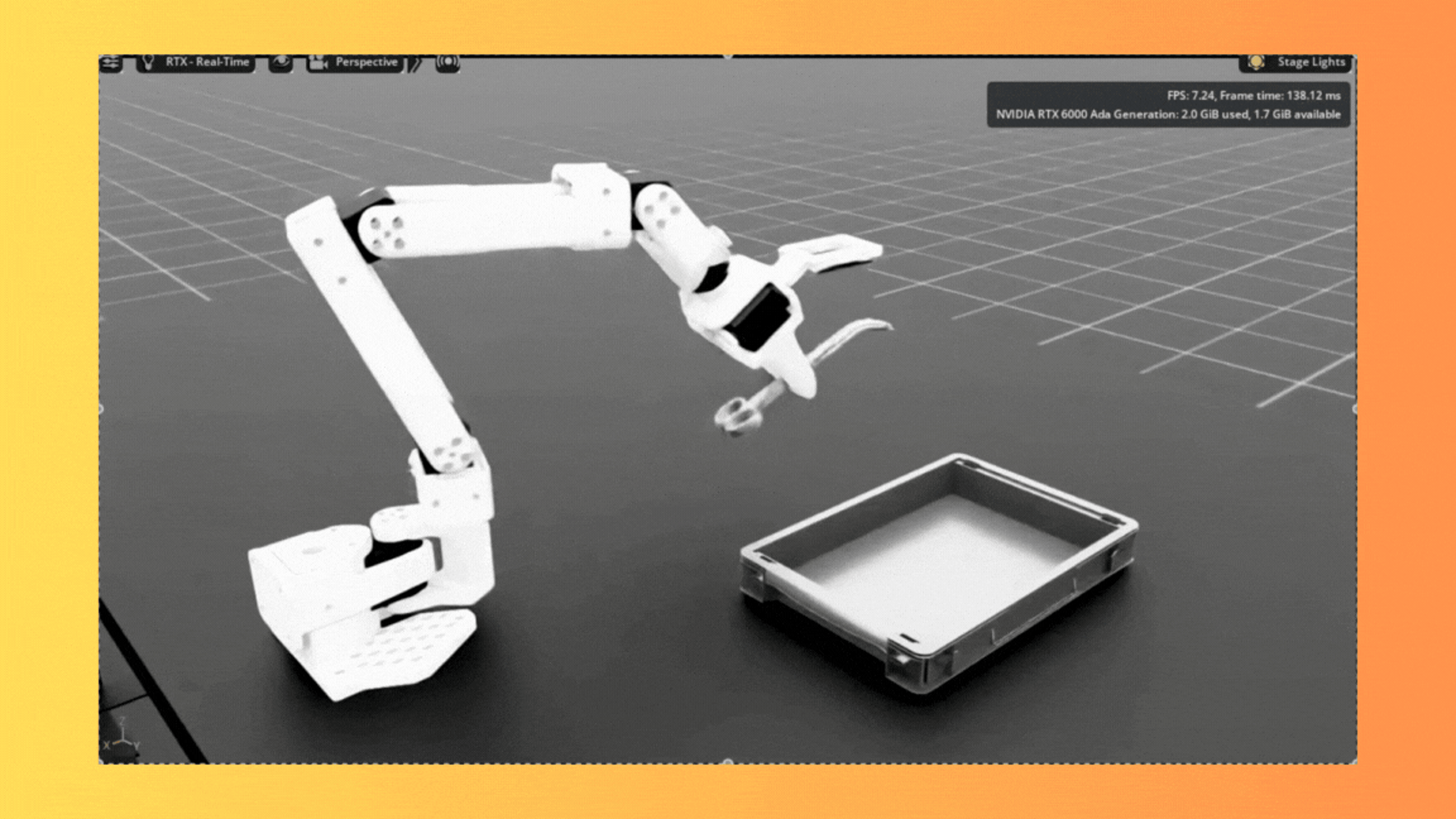

Blog•Oct 29, 2025

Building a Healthcare Robot From Simulation to Deployment with NVIDIA Isaac

NVIDIA released Isaac for Healthcare v0.4 with an end‑to‑end SO‑ARM starter workflow that takes developers from mixed simulation and real‑world data collection through fine‑tuning and real‑time deployment of surgical assistant robots. The pipeline fine‑tunes GR00T N1.5 on predominantly synthetic data...

By Hugging Face

Blog•Oct 28, 2025

Voice Cloning with Consent

Hugging Face researchers propose a “voice consent gate” that allows voice cloning only after explicit, context‑specific spoken consent, and provide a demo and modular code implementing the approach. The system combines autogenerated consent sentences, automatic speech recognition to...

By Hugging Face

Blog•Oct 27, 2025

huggingface_hub v1.0: Five Years of Building the Foundation of Open Machine Learning

Hugging Face has released huggingface_hub v1.0 after five years of development, positioning the library as the mature Python backbone for its Hub and the broader ML ecosystem. The package now powers roughly 200,000 dependent libraries and provides access to more...

By Hugging Face

Blog•Oct 27, 2025

Streaming Datasets: 100x More Efficient

Hugging Face announced major backend improvements to its datasets and huggingface_hub libraries that make streaming multi‑TB training data far more efficient and reliable using the same load_dataset(streaming=True) API. Changes—including a persistent data files cache, optimized resolution logic, Parquet prefetching and...

By Hugging Face

Blog•Oct 24, 2025

LeRobot v0.4.0: Supercharging OSS Robotics Learning

Hugging Face released LeRobot v0.4.0, a major upgrade to its open‑source robotics stack that introduces LeRobotDataset v3.0 (chunked episodes and streaming to handle OXE‑scale datasets >400 GB), new VLA models (PI0.5 and GR00T N1.5), and a plugin system for easier...

By Hugging Face

Blog•Oct 23, 2025

Building the Open Agent Ecosystem Together: Introducing OpenEnv

Meta and Hugging Face launched the OpenEnv Hub, an open community repository, along with a 0.1 RFC standard for “agentic environments” that package tools, APIs, credentials, and execution context into secure, sandboxed interfaces for training and deployment. The Hub, seeded with initial environments...

By Hugging Face

Blog•Oct 22, 2025

Sentence Transformers Is Joining Hugging Face!

Hugging Face announced it is formally taking stewardship of the popular open‑source Sentence Transformers library — maintained by Tom Aarsen since 2023 — transitioning the project from TU Darmstadt’s UKP Lab to Hugging Face while retaining its Apache 2.0 license...

By Hugging Face

Blog•Oct 22, 2025

Hugging Face and VirusTotal Collaborate to Strengthen AI Security

Hugging Face has partnered with VirusTotal to continuously scan all 2.2M+ public model and dataset repositories on the Hugging Face Hub, checking file hashes against VirusTotal’s threat‑intelligence database to surface prior detections and related metadata. The integration retrieves status (clean...
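The integration checks file hashes against VirusTotal’s threat‑intelligence database. As a minimal sketch of that lookup pattern, the code below computes SHA‑256 digests and checks them against a local dictionary standing in for VirusTotal. It does not call VirusTotal’s API; `known_detections`, `scan_repo_files`, and the status strings are hypothetical, and the choice of SHA‑256 is an assumption.

```python
import hashlib


def sha256_of_bytes(data: bytes) -> str:
    """Hex SHA-256 digest of an in-memory blob."""
    return hashlib.sha256(data).hexdigest()


def sha256_of_file(path: str, chunk_size: int = 1 << 20) -> str:
    """Hex SHA-256 digest of a file, read in chunks so large weights fit in memory."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def scan_repo_files(files: dict[str, bytes], known_detections: dict[str, str]) -> dict[str, str]:
    """Map each file name to a status.

    `known_detections` is a hypothetical local stand-in for a threat-intelligence
    lookup: digest -> detection label. Unknown digests get "no prior detection".
    """
    report = {}
    for name, content in files.items():
        digest = sha256_of_bytes(content)
        report[name] = known_detections.get(digest, "no prior detection")
    return report
```

A hash lookup only surfaces files that have already been seen and analyzed, so a missing entry means “no prior detection”, not proof that the file is safe.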

By Hugging Face

Blog•Oct 21, 2025

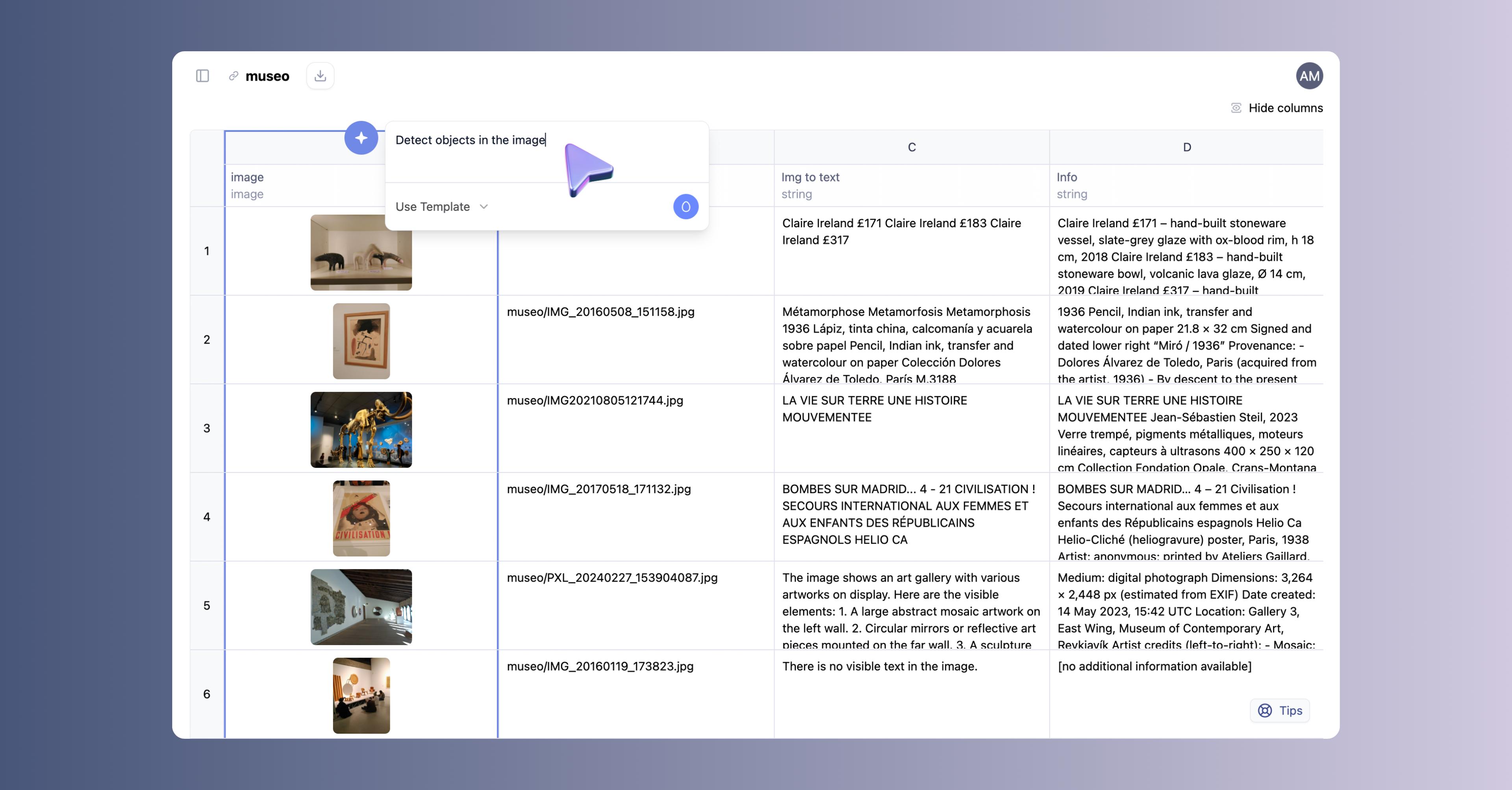

Unlock the Power of Images with AI Sheets

Hugging Face updated its open‑source AI Sheets tool to add full vision support, letting users view, extract, analyze, generate and edit images directly inside spreadsheets using thousands of open models via Inference Providers. The release enables tasks from receipt line‑item...

By Hugging Face

Blog•Oct 21, 2025

Supercharge Your OCR Pipelines with Open Models

A new practical guide maps the rapidly evolving landscape of open‑weight vision‑language OCR models, explaining when to fine‑tune versus use off‑the‑shelf models and how to move beyond basic transcription to multimodal retrieval and document QA. It compares leading open models...

By Hugging Face

Blog•Oct 16, 2025

Google Cloud C4 Brings a 70% TCO Improvement on GPT OSS with Intel and Hugging Face

Intel and Hugging Face benchmarked OpenAI’s GPT OSS on Google Cloud’s new C4 VMs (Intel Xeon 6/Granite Rapids) and report a 1.7x improvement in total cost of ownership versus prior-generation C3 instances. The C4 machines delivered 1.4x–1.7x better throughput per...

By Hugging Face

Blog•Oct 15, 2025

Get Your VLM Running in 3 Simple Steps on Intel CPUs

By Hugging Face