Edge Data Centers Vs. Edge Devices: When to Use Each

February 18, 2026

Why It Matters

Choosing the right edge layer directly impacts latency, security compliance, and operational expense, shaping competitive advantage in IoT‑driven markets.

Key Takeaways

- Edge data centers suit compute‑intensive, high‑throughput workloads
- Edge devices excel for mobile, low‑latency processing
- Hybrid approach balances performance, security, and cost
- Manageability favors data centers; devices need fleet tools
- TCO depends on scale, redundancy, and lifecycle

Pulse Analysis

Edge data centers have emerged as a strategic bridge between centralized clouds and the ultra‑local processing needs of modern applications. By colocating compute and storage near dense user clusters—such as stadiums, factories, or 5G nodes—these facilities deliver consistent low latency, high bandwidth, and hardened physical security. Their modular designs enable rapid deployment and scaling, making them ideal for video analytics, AI model serving, and regulated workloads that demand strict data‑sovereignty and audit trails.

Conversely, edge devices push intelligence directly to the point of generation. Smartphones, wearables, industrial gateways, and in‑store servers process data on‑device, reducing network hops and preserving privacy. Advances in efficient AI models and hardware acceleration have expanded on‑device capabilities, yet constraints around power, storage, and heterogeneous environments persist. Effective device strategies rely on robust fleet‑management platforms to handle updates, security patches, and telemetry across diverse hardware ecosystems.

The most resilient architectures combine both layers, assigning lightweight, real‑time tasks to edge devices while offloading heavier, compliance‑driven workloads to nearby edge data centers. This hybrid model balances operational overhead, capital expenditure, and performance, allowing enterprises to scale compute resources without sacrificing security or uptime. As 5G rollout accelerates and data‑driven services proliferate, aligning edge strategy with business objectives will be a decisive factor for digital transformation initiatives.

Building an Edge Computing Strategy: Edge Data Centers vs. Edge Devices

Image: Siemens – A modular edge data center introduced by Siemens Smart Infrastructure, Cadolto Datacenter GmbH, and Legrand Data Center Solutions in June 2025.

Architecture Choices

- Host workloads in an edge data center – a facility designed to run compute and store data close to end users and data sources.
- Run workloads directly on individual edge devices – distributed hardware such as servers, gateways, sensors, smartphones, and wearables.

This article explains what each option entails, how they differ, and when to choose one over the other.

What Is Edge Computing and Why Use It?

Edge computing places data and compute physically closer to the workloads that produce or consume them – at the network’s “edge” – rather than in a distant centralized infrastructure.

Primary benefit: lower network latency, which improves responsiveness for time‑sensitive applications.

Additional benefits:

- Bandwidth savings (by pre‑processing or filtering data locally)
- Improved privacy (by keeping sensitive data local)
- Resilience (by enabling continued operation during connectivity disruptions)
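The bandwidth saving from local filtering can be illustrated with a deadband filter, one common pre‑processing technique. This is a minimal sketch, not something the article prescribes: the function name `deadband_filter`, the threshold, and the sample readings are all hypothetical.

```python
from typing import Iterable, Iterator, Optional


def deadband_filter(readings: Iterable[float], threshold: float) -> Iterator[float]:
    """Forward a reading only when it moves more than `threshold`
    away from the last value that was forwarded upstream."""
    last_sent: Optional[float] = None
    for value in readings:
        if last_sent is None or abs(value - last_sent) > threshold:
            last_sent = value
            yield value


# Hypothetical temperature samples from a single sensor.
samples = [20.0, 20.1, 20.2, 21.5, 21.6, 19.0, 19.1]
forwarded = list(deadband_filter(samples, threshold=1.0))

print(f"forwarded {len(forwarded)} of {len(samples)} readings: {forwarded}")
```

With these made‑up samples, only three of the seven readings leave the device. The right threshold depends on how much detail the upstream consumer actually needs.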

“The edge” is contextual and relative. In one architecture a retail store’s local server may serve as the edge; in another, a neighborhood micro‑data center or mobile facility near a factory may serve as the edge. What counts as the edge depends on where your workloads sit, how they interact, and where latency, sovereignty, and resilience constraints apply.

What Is an Edge Data Center?

An edge data center is a purpose‑built facility, often smaller than conventional data centers and sometimes modular or mobile, deployed near concentrations of users or devices (dense urban areas, stadiums, industrial sites). These facilities provide many of the same advantages as traditional data centers, including:

- Consolidated operations, monitoring, and maintenance

Edge data centers deliver these benefits on a localized scale to minimize latency while preserving reliability and governance. They may be standalone, part of a colocation campus, integrated with telecom infrastructure (e.g., multi‑access edge computing near 5G), or modular containers placed on‑premises.

What Is an Edge Device?

An edge device is any hardware deployed near data sources or end users. Edge devices may reside in edge data centers, but they often operate standalone at the point of need.

Examples include:

- In‑store servers that process transactions locally to speed up payment handling.
- Industrial sensors and gateways that collect and preprocess telemetry.
- Consumer devices – smartphones, tablets, wearables – that can store and process data on‑device, reducing round trips to centralized infrastructure.

Edge devices excel at distributing compute to where it’s needed most, often enabling real‑time or offline operation.

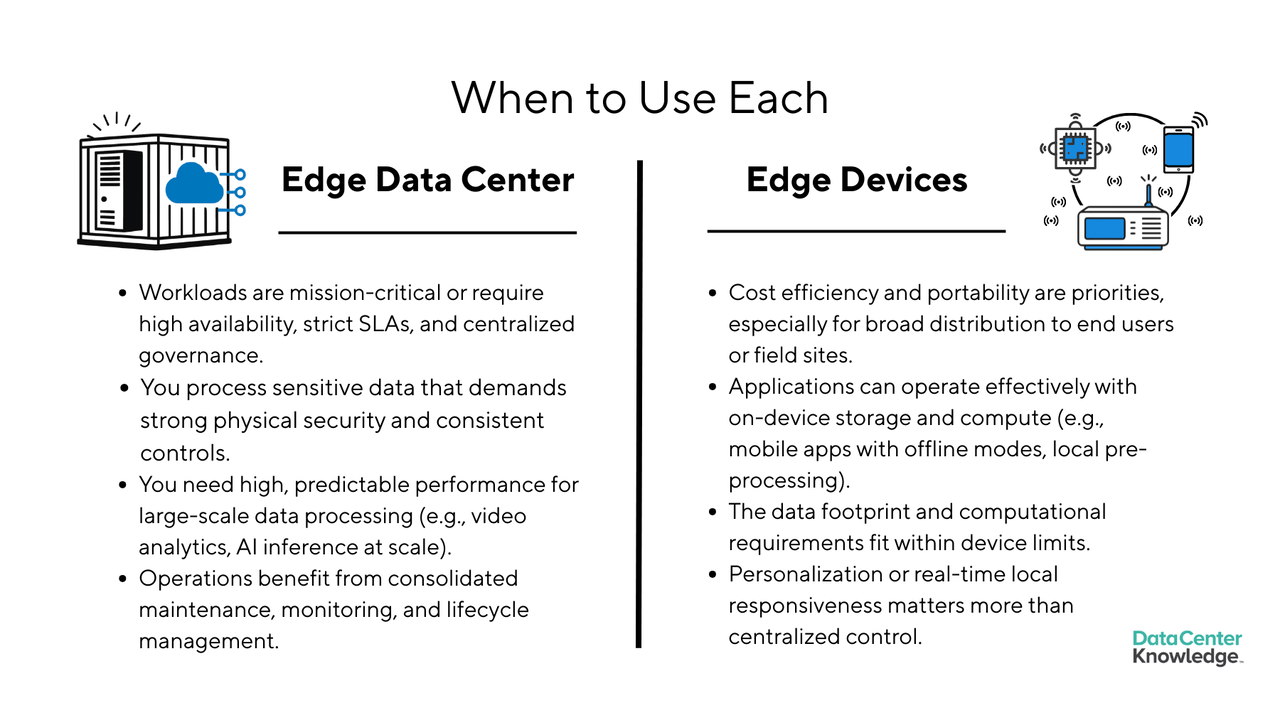

Key Trade‑offs: Edge Data Centers vs. Edge Devices

Performance and Scale

- Edge data centers aggregate compute and storage, making them well‑suited for large‑scale or compute‑intensive workloads (e.g., video analytics, high‑throughput ingestion, model serving).
- Edge devices are limited by hardware constraints (CPU/GPU power, storage, battery life), though advances in efficient models and quantization continue to expand on‑device capabilities.

Mobility and Placement Flexibility

- Devices typically follow users or assets. If workloads and data sources move (e.g., vehicles, field teams), device‑based processing preserves low latency wherever they are.
- Edge data centers are fixed to specific sites, which is ideal when traffic is concentrated but less adaptable for moving assets.

Security, Compliance, and Data Governance

- Edge data centers provide controlled physical environments, access logging, and standardized security measures, which makes them preferable for highly sensitive data, regulated workloads, or strict chain‑of‑custody requirements.
- Edge devices often lack robust physical safeguards and can be lost or tampered with, making them riskier for mission‑critical workloads that demand consistent protection and audited access.

Reliability and Resilience

- Edge data centers deliver redundancy for power, cooling, and networks, plus remote‑hands support and monitoring, which suits workloads that require predictable uptime.
- Edge devices are more vulnerable to battery drain, intermittent connectivity, and environmental factors.

Manageability and Operations

- Edge data centers centralize deployment, patching, observability, and incident response.
- Devices introduce heterogeneity (different hardware models, OS versions, connectivity types) and require fleet management, secure update mechanisms, and configuration‑drift control. Larger, more diverse fleets increase operational overhead.
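Configuration‑drift control on a device fleet comes down to comparing what each device reports against an approved baseline. The sketch below shows that comparison in its simplest form. The device IDs, setting names, and version strings are invented for illustration, and a real fleet‑management platform would gather this data through its own agents and APIs.

```python
from typing import Dict

Config = Dict[str, str]


def find_drift(fleet: Dict[str, Config], baseline: Config) -> Dict[str, Config]:
    """Return, per device, every baseline setting whose reported value differs."""
    drift: Dict[str, Config] = {}
    for device_id, config in fleet.items():
        diff = {
            key: config.get(key, "<missing>")
            for key, expected in baseline.items()
            if config.get(key) != expected
        }
        if diff:
            drift[device_id] = diff
    return drift


# Hypothetical baseline and reported configurations.
baseline = {"os_version": "1.4.2", "tls_min": "1.3"}
fleet = {
    "gateway-01": {"os_version": "1.4.2", "tls_min": "1.3"},
    "gateway-02": {"os_version": "1.3.9", "tls_min": "1.3"},
    "camera-07": {"os_version": "1.4.2"},
}

drifted = find_drift(fleet, baseline)
for device_id, diff in drifted.items():
    print(device_id, diff)
```

The more hardware models and OS versions a fleet contains, the more baselines a check like this has to track, which is one source of the operational overhead described above.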

Cost and TCO

- Data centers involve site selection, leases or capital outlay, installation, energy, and ongoing operations, but they amortize well at scale and simplify governance.
- Devices can be inexpensive, or even free when consumer‑owned, but incur ongoing costs for fleet management, connectivity, replacement cycles, and specialized ruggedized hardware when needed.

Evaluate costs holistically: capex, opex, energy, networking, lifecycle, and compliance.
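A holistic comparison can start with a simple model that combines upfront spend, recurring spend, and replacement cycles over a planning horizon. The following is a rough sketch only. The `CostModel` class is hypothetical, and every figure is a placeholder chosen to make the arithmetic easy to follow, not a benchmark.

```python
from dataclasses import dataclass


@dataclass
class CostModel:
    capex: float         # one-time spend per purchase cycle (hardware, site build-out)
    annual_opex: float   # recurring yearly spend (energy, connectivity, fleet tooling, staff)
    lifetime_years: int  # years before the hardware must be replaced

    def tco(self, horizon_years: int) -> float:
        # Ceiling division: purchase cycles needed to cover the horizon.
        cycles = -(-horizon_years // self.lifetime_years)
        return self.capex * cycles + self.annual_opex * horizon_years


# Placeholder numbers for illustration only.
edge_data_center = CostModel(capex=500_000, annual_opex=120_000, lifetime_years=10)
device_fleet = CostModel(
    capex=1_000 * 300,       # 1,000 devices at 300 each
    annual_opex=1_000 * 90,  # per-device management and connectivity
    lifetime_years=3,
)

horizon = 6
print("edge data center:", edge_data_center.tco(horizon))
print("device fleet:    ", device_fleet.tco(horizon))
```

With these placeholder inputs the fleet's lower purchase price is largely offset by its shorter replacement cycle, which is why lifecycle belongs in the comparison alongside capex and opex.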

A Practical Middle Path: Hybrid Edge

Many organizations adopt a layered approach:

- Edge devices handle lightweight processing for immediate responsiveness (filtering, caching, local inference).
- Edge data centers take on heavier, sensitive, or coordinated tasks.

Combining both layers often yields the best mix of performance, resilience, and operational efficiency.
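The layered split can be written down as a placement rule. The sketch below encodes only the heuristic stated above: heavier, sensitive, or coordinated work goes to the edge data center, and everything else stays on the device. The `Workload` fields and example workloads are assumptions made for illustration, and a real placement policy would weigh many more factors.

```python
from dataclasses import dataclass
from enum import Enum


class Tier(Enum):
    DEVICE = "edge device"
    EDGE_DC = "edge data center"


@dataclass
class Workload:
    name: str
    regulated: bool      # sensitive data or compliance requirements
    compute_heavy: bool  # more than a typical device can run
    coordinated: bool    # needs a shared view across many devices


def place(workload: Workload) -> Tier:
    """Send heavier, sensitive, or coordinated work to the edge data center."""
    if workload.regulated or workload.compute_heavy or workload.coordinated:
        return Tier.EDGE_DC
    return Tier.DEVICE


# Hypothetical workloads.
workloads = [
    Workload("sensor noise filtering", regulated=False, compute_heavy=False, coordinated=False),
    Workload("multi-camera video analytics", regulated=False, compute_heavy=True, coordinated=True),
    Workload("payment audit log", regulated=True, compute_heavy=False, coordinated=False),
]

placement = {w.name: place(w) for w in workloads}
for name, tier in placement.items():
    print(f"{name} -> {tier.value}")
```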

| Consideration | Edge Data Centers | Edge Devices |
|---|---|---|
| Cost Model | Higher upfront and operational costs. | Lower per‑device cost; may use existing user‑owned hardware. |
| Security | Strong physical controls and standardized hardening. | Variable; limited physical protections on consumer devices. |
| Reliability | Designed for redundancy and uptime. | More prone to failures from power, connectivity, and wear. |
| Scalability | Centralized scaling and resource pooling. | Scales by distributing devices; heterogeneity adds complexity. |
| Manageability | Easier to govern with consistent policies. | Diverse hardware/OS versions complicate updates and monitoring. |
| Portability | Fixed or semi‑fixed locations. | Highly portable; can be used with users or embedded in assets. |
| Performance | High throughput and consistent latency. | Performance varies by device capabilities and network conditions. |
